WorkLab

·S9 E4

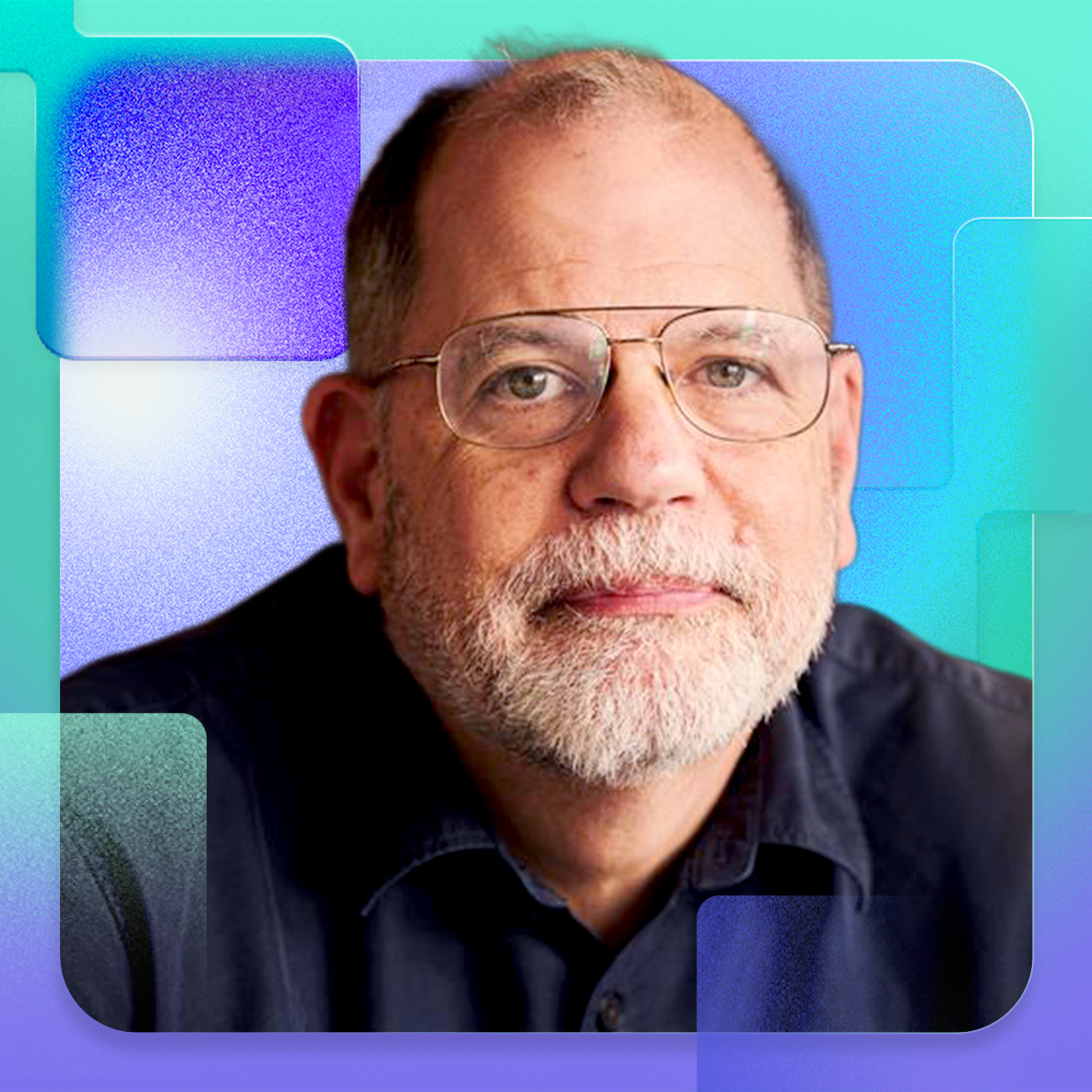

Economist Tyler Cowen on the positive side of AI negativity

Episode Transcript

1

00:00:00,458 --> 00:00:01,584

Most US jobs,

2

00:00:01,584 --> 00:00:02,502

the threat to your job,

3

00:00:02,502 --> 00:00:03,169

it’s not AI.

4

00:00:03,628 --> 00:00:04,546

It’s some other person

5

00:00:04,546 --> 00:00:06,423

who uses AI better than you do.

6

00:00:11,803 --> 00:00:12,595

Welcome to WorkLab,

7

00:00:12,595 --> 00:00:13,847

the podcast from Microsoft.

8

00:00:14,139 --> 00:00:15,223

I’m your host, Molly Wood.

9

00:00:15,473 --> 00:00:16,224

On WorkLab,

10

00:00:16,224 --> 00:00:17,767

we talk to experts about AI

11

00:00:17,767 --> 00:00:19,144

and the future of work.

12

00:00:19,519 --> 00:00:20,812

We talk about how technology

13

00:00:20,812 --> 00:00:21,688

can supercharge

14

00:00:21,688 --> 00:00:22,981

human capabilities,

15

00:00:22,981 --> 00:00:25,525

how it can transform entire organizations

16

00:00:25,525 --> 00:00:26,735

and turn them into

17

00:00:26,735 --> 00:00:28,611

what we like to call Frontier Firms.

18

00:00:28,862 --> 00:00:30,655

Today, we’re joined by Tyler Cowen.

19

00:00:30,655 --> 00:00:32,282

Tyler is the Holbert L. Harris

20

00:00:32,282 --> 00:00:33,199

Chair of Economics

21

00:00:33,199 --> 00:00:34,701

at George Mason University,

22

00:00:34,909 --> 00:00:36,661

author and deeply influential

23

00:00:36,661 --> 00:00:38,329

public thinker who co-writes

24

00:00:38,329 --> 00:00:39,748

the Marginal Revolution blog,

25

00:00:40,040 --> 00:00:40,665

and hosts the

26

00:00:40,665 --> 00:00:42,834

“Conversations with Tyler” podcast.

27

00:00:43,168 --> 00:00:44,252

Today, he’s going to endure

28

00:00:44,252 --> 00:00:45,420

being interviewed

29

00:00:45,420 --> 00:00:47,005

on the other side of the microphone.

30

00:00:47,005 --> 00:00:47,881

In the past few years,

31

00:00:47,881 --> 00:00:48,673

he has been tracking

32

00:00:48,673 --> 00:00:49,883

how generative AI

33

00:00:49,883 --> 00:00:51,384

is impacting the economy,

34

00:00:51,384 --> 00:00:53,470

labor markets, and institutions.

35

00:00:53,720 --> 00:00:54,554

His writing explores

36

00:00:54,554 --> 00:00:56,014

how technology is redefining

37

00:00:56,014 --> 00:00:57,640

productivity, creativity,

38

00:00:57,640 --> 00:00:58,850

and the skills that will matter

39

00:00:58,850 --> 00:00:59,809

most in the years ahead.

40

00:01:00,101 --> 00:01:01,728

Tyler, thanks for joining me on WorkLab.

41

00:01:02,020 --> 00:01:03,438

Molly, happy to be here.

42

00:01:03,772 --> 00:01:06,149

Okay, so I want to ask you first about

43

00:01:06,691 --> 00:01:08,818

kind of a shift from you, actually.

44

00:01:08,818 --> 00:01:11,279

Your 2011 book, “The Great Stagnation,”

45

00:01:11,279 --> 00:01:12,697

got you this kind of reputation

46

00:01:12,697 --> 00:01:15,825

as a skeptic, a technological pessimist.

47

00:01:16,201 --> 00:01:17,077

You characterized the US

48

00:01:17,077 --> 00:01:19,412

as being stuck in an innovation slump,

49

00:01:19,412 --> 00:01:21,164

and today

50

00:01:21,164 --> 00:01:21,831

you’re a little bit

51

00:01:21,831 --> 00:01:23,666

more of an energetic optimist about tech,

52

00:01:23,666 --> 00:01:24,375

I would say.

53

00:01:24,584 --> 00:01:25,668

Do you think that’s fair?

54

00:01:25,668 --> 00:01:26,419

And if so,

55

00:01:26,419 --> 00:01:27,670

what flipped that switch for you?

56

00:01:28,046 --> 00:01:28,671

That’s fair.

57

00:01:28,671 --> 00:01:30,381

But keep in mind, in my 2011

58

00:01:30,381 --> 00:01:31,925

book, “The Great Stagnation,”

59

00:01:31,925 --> 00:01:32,842

I predicted we

60

00:01:32,842 --> 00:01:33,343

would get out of

61

00:01:33,343 --> 00:01:34,302

the great stagnation

62

00:01:34,302 --> 00:01:35,804

in the next 20 years.

63

00:01:35,804 --> 00:01:37,972

And my book after that, “Average Is Over,”

64

00:01:37,972 --> 00:01:39,349

I think that’s 2013,

65

00:01:39,766 --> 00:01:41,059

was saying AI is what’s

66

00:01:41,059 --> 00:01:42,227

going to pull us out.

67

00:01:42,227 --> 00:01:43,186

So I think my view’s

68

00:01:43,186 --> 00:01:44,687

been pretty consistent

69

00:01:44,687 --> 00:01:46,523

and I’m proud of the predictions

70

00:01:46,523 --> 00:01:47,857

in the earlier book.

71

00:01:47,857 --> 00:01:49,150

And we’re finally at the point

72

00:01:49,150 --> 00:01:50,151

where it’s happening.

73

00:01:50,151 --> 00:01:53,154

I would say biomedical advances and AI,

74

00:01:53,154 --> 00:01:55,490

which are now also meshing together,

75

00:01:55,490 --> 00:01:57,408

mean we’re back to real progress again.

76

00:01:57,408 --> 00:01:58,493

It’s fantastic.

77

00:01:58,493 --> 00:01:59,369

The funny thing is,

78

00:01:59,369 --> 00:02:00,703

people don’t always like it.

79

00:02:00,703 --> 00:02:02,038

They actually prefer

80

00:02:02,038 --> 00:02:03,456

the world of stagnation.

81

00:02:03,873 --> 00:02:04,958

Ooh, say more about that.

82

00:02:05,500 --> 00:02:07,168

It’s more predictable.

83

00:02:07,168 --> 00:02:08,169

In any given year,

84

00:02:08,169 --> 00:02:09,963

your income may not go up as much,

85

00:02:09,963 --> 00:02:10,880

but you know what your job

86

00:02:10,880 --> 00:02:11,548

will look like

87

00:02:11,548 --> 00:02:13,299

for the next 10 or 20 years,

88

00:02:13,550 --> 00:02:15,218

and that’s comforting to people.

89

00:02:15,343 --> 00:02:16,427

If I think of my own job,

90

00:02:16,427 --> 00:02:18,096

university professor,

91

00:02:18,096 --> 00:02:19,389

I started thinking about it

92

00:02:19,389 --> 00:02:20,557

when I was 14.

93

00:02:20,557 --> 00:02:21,933

Now I’m 63.

94

00:02:21,933 --> 00:02:23,810

That’s almost 50 years.

95

00:02:23,810 --> 00:02:25,687

What I do has changed a bit,

96

00:02:25,687 --> 00:02:26,771

but it’s the same job

97

00:02:26,771 --> 00:02:28,189

I thought I was getting.

98

00:02:28,189 --> 00:02:29,566

And that will not be true

99

00:02:29,566 --> 00:02:31,693

for many people in the coming generation.

100

00:02:31,943 --> 00:02:33,069

Right. That is a fair point.

101

00:02:33,069 --> 00:02:34,445

We are going to talk a fair bit

102

00:02:34,445 --> 00:02:35,613

about change management

103

00:02:35,613 --> 00:02:36,656

because as you point out,

104

00:02:36,656 --> 00:02:39,075

we are in a moment of extreme change.

105

00:02:39,534 --> 00:02:40,743

What did you see then that made

106

00:02:40,743 --> 00:02:41,619

you think AI would be

107

00:02:41,619 --> 00:02:42,954

the thing that pulled us out?

108

00:02:42,954 --> 00:02:44,622

And what do you think

109

00:02:44,622 --> 00:02:45,748

about where we are now?

110

00:02:46,040 --> 00:02:47,250

When I was very young,

111

00:02:47,250 --> 00:02:48,459

I was a chess player,

112

00:02:48,459 --> 00:02:49,502

and I saw chess-playing

113

00:02:49,502 --> 00:02:51,546

computers back in the 1970s.

114

00:02:51,546 --> 00:02:52,714

And they were terrible.

115

00:02:52,714 --> 00:02:53,590

But I saw the pace

116

00:02:53,590 --> 00:02:54,841

at which they advanced.

117

00:02:54,841 --> 00:02:56,634

And by 1997,

118

00:02:56,634 --> 00:02:59,053

you have Deep Blue beating Garry Kasparov

119

00:02:59,053 --> 00:03:00,430

and being the strongest

120

00:03:00,430 --> 00:03:02,307

chess-playing entity in the world.

121

00:03:02,599 --> 00:03:04,017

What chess players know is

122

00:03:04,017 --> 00:03:05,518

how much intuition is in chess.

123

00:03:05,518 --> 00:03:07,228

It’s not mainly calculation.

124

00:03:07,228 --> 00:03:08,605

So if you’ve seen computers

125

00:03:08,605 --> 00:03:09,856

advance in chess,

126

00:03:09,856 --> 00:03:11,941

you know they can handle intuition,

127

00:03:11,941 --> 00:03:12,692

which is something

128

00:03:12,692 --> 00:03:14,652

a lot of people at the time didn’t see.

129

00:03:14,652 --> 00:03:15,528

But it’s interesting

130

00:03:15,528 --> 00:03:17,488

that myself, Kasparov,

131

00:03:17,488 --> 00:03:18,781

who is a chess player,

132

00:03:18,781 --> 00:03:20,283

and Ken Rogoff, an economist

133

00:03:20,283 --> 00:03:22,202

and also a champion chess player,

134

00:03:22,202 --> 00:03:23,453

we all were very early

135

00:03:23,453 --> 00:03:24,871

to be bullish on AI,

136

00:03:24,871 --> 00:03:26,247

and it’s all for the same reason.

137

00:03:26,247 --> 00:03:27,123

The link to chess.

138

00:03:27,582 --> 00:03:28,625

Well,

139

00:03:28,625 --> 00:03:30,084

I mean, that is a conversation

140

00:03:30,084 --> 00:03:32,212

I would like to have for an hour,

141

00:03:32,212 --> 00:03:32,712

but won’t.

142

00:03:33,379 --> 00:03:34,464

Let’s fast forward to

143

00:03:34,464 --> 00:03:37,425

roughly today or around 2022

144

00:03:37,425 --> 00:03:40,220

when certainly AI had existed

145

00:03:40,220 --> 00:03:41,012

for a long time before,

146

00:03:41,012 --> 00:03:42,263

but generative AI

147

00:03:42,263 --> 00:03:43,765

then has created

148

00:03:43,765 --> 00:03:45,433

this sort of new tsunami.

149

00:03:46,100 --> 00:03:47,227

How would you describe

150

00:03:47,227 --> 00:03:49,270

the economic impact

151

00:03:49,812 --> 00:03:51,689

now, since its introduction?

152

00:03:52,106 --> 00:03:53,858

Well, right now it’s still very small.

153

00:03:53,858 --> 00:03:54,984

If you’re a programmer,

154

00:03:54,984 --> 00:03:56,694

it’s had a big impact on you.

155

00:03:56,694 --> 00:03:57,862

If you’re good at using it,

156

00:03:57,862 --> 00:03:59,364

it does a lot of your work.

157

00:03:59,364 --> 00:04:00,907

It makes it harder for some

158

00:04:00,949 --> 00:04:02,492

maybe junior-level programmers

159

00:04:02,492 --> 00:04:03,451

to get hired.

160

00:04:03,826 --> 00:04:04,827

Most of the economy,

161

00:04:04,827 --> 00:04:06,120

I would say it saves people

162

00:04:06,120 --> 00:04:07,121

a lot of time.

163

00:04:07,121 --> 00:04:08,706

It’s treated as an add-on.

164

00:04:08,706 --> 00:04:10,416

It’s turned into leisure. Work

165

00:04:10,416 --> 00:04:12,085

that might have taken you three hours,

166

00:04:12,085 --> 00:04:13,628

it’s done in five minutes.

167

00:04:13,628 --> 00:04:15,255

But in terms of GDP growth,

168

00:04:15,255 --> 00:04:16,506

productivity growth,

169

00:04:16,506 --> 00:04:17,548

we’re not there yet.

170

00:04:17,882 --> 00:04:20,134

Let’s stay on this line

171

00:04:20,134 --> 00:04:21,344

of where we are and where we’re going.

172

00:04:21,344 --> 00:04:22,428

Because certainly

173

00:04:22,428 --> 00:04:23,763

it is the way of tech leaders

174

00:04:23,763 --> 00:04:24,555

to predict

175

00:04:24,555 --> 00:04:25,682

an immediate

176

00:04:25,682 --> 00:04:26,808

productivity boom

177

00:04:26,808 --> 00:04:28,518

from any new technology.

178

00:04:28,643 --> 00:04:31,604

Some economic experts predicted the same.

179

00:04:31,688 --> 00:04:32,939

Do you think the impact

180

00:04:32,939 --> 00:04:35,900

is, in fact, slower than

181

00:04:35,900 --> 00:04:36,651

predicted,

182

00:04:36,651 --> 00:04:38,903

or was this also part of the chess game?

184

00:04:39,112 --> 00:04:41,030

It depends who’s doing the prediction,

185

00:04:41,030 --> 00:04:41,906

but I think we need

186

00:04:41,906 --> 00:04:43,992

a whole new generation of firms

187

00:04:43,992 --> 00:04:45,451

and institutions.

188

00:04:45,451 --> 00:04:47,620

If you take currently existing companies

189

00:04:47,620 --> 00:04:49,706

or nonprofits or universities

190

00:04:49,706 --> 00:04:50,540

and try to get them

191

00:04:50,540 --> 00:04:53,084

to reorganize themselves around AI,

192

00:04:53,084 --> 00:04:54,502

mostly you will fail.

193

00:04:54,711 --> 00:04:56,629

I mean, think back to the earlier years

194

00:04:56,629 --> 00:04:58,381

of the American auto industry.

195

00:04:58,381 --> 00:04:59,757

Toyota is a kind of threat

196

00:04:59,757 --> 00:05:00,925

to General Motors.

197

00:05:00,925 --> 00:05:02,302

Try to get General Motors

198

00:05:02,302 --> 00:05:04,429

to do the good things Toyota was doing.

199

00:05:04,429 --> 00:05:06,806

That’s arguably a much simpler problem,

200

00:05:06,806 --> 00:05:08,308

but mostly they failed.

201

00:05:08,308 --> 00:05:10,101

And we just didn’t adjust in time.

202

00:05:10,101 --> 00:05:11,686

So adjusting to AI,

203

00:05:11,686 --> 00:05:14,522

which is a much more radical change,

204

00:05:14,522 --> 00:05:17,275

you need startups, which will do things

205

00:05:17,275 --> 00:05:18,735

like supply legal services

206

00:05:18,735 --> 00:05:21,112

or medical diagnosis or whatever.

207

00:05:21,112 --> 00:05:22,071

And over time

208

00:05:22,071 --> 00:05:23,031

they will cycle through

209

00:05:23,031 --> 00:05:25,700

and generationally become dominant firms.

210

00:05:25,908 --> 00:05:27,618

But that could take 20 years or more.

211

00:05:27,618 --> 00:05:28,870

It will happen bit by bit,

212

00:05:28,870 --> 00:05:31,164

piece by piece, step by step.

213

00:05:31,164 --> 00:05:31,372

Yeah.

214

00:05:31,372 --> 00:05:32,206

This is something you’ve written

215

00:05:32,206 --> 00:05:32,540

about: that

216

00:05:32,540 --> 00:05:34,208

institutions, specifically

217

00:05:34,208 --> 00:05:35,543

these, you know, universities,

218

00:05:35,543 --> 00:05:36,461

big businesses,

219

00:05:36,461 --> 00:05:37,420

governments,

220

00:05:37,420 --> 00:05:40,340

are the real bottleneck to AI progress.

221

00:05:40,423 --> 00:05:41,799

Do you think that it is possible

222

00:05:41,799 --> 00:05:43,468

for a legacy institution

223

00:05:43,468 --> 00:05:44,761

to reinvent itself

224

00:05:44,761 --> 00:05:45,553

as a Frontier Firm,

225

00:05:45,553 --> 00:05:46,763

or do we have to wait for this startup

226

00:05:46,763 --> 00:05:48,056

cycle to play out?

227

00:05:48,556 --> 00:05:50,308

I think we mostly have to wait.

228

00:05:50,308 --> 00:05:51,642

I think we’ll be surprised

229

00:05:51,642 --> 00:05:53,436

that some companies will do it.

230

00:05:53,436 --> 00:05:54,479

They may be companies

231

00:05:54,479 --> 00:05:55,813

which are founder-led,

232

00:05:55,813 --> 00:05:57,732

where the founder can storm into the room

233

00:05:57,732 --> 00:05:59,233

and just say, “We’re going to do this,”

234

00:05:59,233 --> 00:06:01,694

and no one can challenge that logic.

235

00:06:01,944 --> 00:06:03,446

But there are just not going to be

236

00:06:03,446 --> 00:06:04,864

that many players in the game.

237

00:06:04,864 --> 00:06:07,450

So I think it will be painful.

238

00:06:07,450 --> 00:06:09,660

Hardest of all, with nonprofits

239

00:06:09,660 --> 00:06:11,162

and especially universities

240

00:06:11,162 --> 00:06:12,080

and governments.

241

00:06:12,538 --> 00:06:16,042

The IRS still gets millions of faxes

242

00:06:16,042 --> 00:06:18,336

every year and uses fax machines.

243

00:06:18,336 --> 00:06:19,670

So you’re telling the IRS,

244

00:06:19,670 --> 00:06:21,422

“Organize everything around AI.”

245

00:06:21,756 --> 00:06:23,424

How about telling the IRS,

246

00:06:23,424 --> 00:06:24,592

“Use the internet more.”

247

00:06:24,592 --> 00:06:26,803

We haven’t even quite managed to do that.

248

00:06:26,803 --> 00:06:28,096

So yes, it will be slow.

249

00:06:28,346 --> 00:06:28,638

Yeah.

250

00:06:28,930 --> 00:06:30,431

It seems like everybody sort of

251

00:06:30,431 --> 00:06:31,599

feels like we’re in a bubble

252

00:06:31,599 --> 00:06:32,475

and we’re headed for a crash,

253

00:06:32,475 --> 00:06:33,935

and it’s going to come any minute now.

254

00:06:35,103 --> 00:06:36,312

Do you agree?

255

00:06:36,604 --> 00:06:37,480

I would make this point.

256

00:06:37,480 --> 00:06:38,064

Right now

257

00:06:38,064 --> 00:06:39,524

tech sector earnings

258

00:06:39,524 --> 00:06:41,067

are higher than tech sector

259

00:06:41,067 --> 00:06:42,819

CapEx expenditures.

260

00:06:42,819 --> 00:06:43,319

That’s good.

261

00:06:43,319 --> 00:06:44,278

It means they can afford

262

00:06:44,278 --> 00:06:45,571

to spend the money.

263

00:06:45,571 --> 00:06:46,781

Do I think every big

264

00:06:46,781 --> 00:06:48,574

or even small tech company

265

00:06:48,658 --> 00:06:50,076

will earn more because of this?

266

00:06:50,076 --> 00:06:50,326

No.

267

00:06:50,326 --> 00:06:51,119

I think a lot of them

268

00:06:51,119 --> 00:06:52,286

will lose a lot of money.

269

00:06:52,412 --> 00:06:53,413

It’s like the railroads.

270

00:06:53,413 --> 00:06:55,248

We did overbuild the railroads,

271

00:06:55,248 --> 00:06:56,874

but the railroads didn’t go away.

272

00:06:56,874 --> 00:06:58,835

In fact, they transformed the world.

273

00:06:58,835 --> 00:07:00,378

So it’s not like Pets.com,

274

00:07:00,378 --> 00:07:01,421

where one day it’s gone

275

00:07:01,421 --> 00:07:03,506

and you say, “Boo hoo. It was a bubble.”

276

00:07:03,506 --> 00:07:05,675

AI is here to last. It’s going to work.

277

00:07:05,675 --> 00:07:06,676

There’ll be major winners.

278

00:07:06,676 --> 00:07:08,177

There’ll be a lot of losers.

279

00:07:08,177 --> 00:07:09,679

A very common story.

280

00:07:09,679 --> 00:07:10,805

If you want to call that a bubble

281

00:07:10,805 --> 00:07:11,848

because there are losers,

282

00:07:11,848 --> 00:07:12,849

that’s fine,

283

00:07:12,849 --> 00:07:14,183

but it’s not a bubble in the sense

284

00:07:14,183 --> 00:07:16,185

that when a bubble pops, it’s gone.

285

00:07:17,270 --> 00:07:17,895

I think you could argue

286

00:07:17,895 --> 00:07:18,855

that the parallel, actually,

287

00:07:18,855 --> 00:07:20,440

the Pets.com parallel,

288

00:07:20,815 --> 00:07:22,275

might apply now,

289

00:07:22,275 --> 00:07:23,526

which is that the internet

290

00:07:23,526 --> 00:07:24,360

didn’t go anywhere.

291

00:07:24,360 --> 00:07:24,861

You know,

292

00:07:24,861 --> 00:07:26,446

this new economy didn’t go anywhere.

293

00:07:26,446 --> 00:07:29,031

We just had a little bit of a speed bump.

294

00:07:29,031 --> 00:07:30,074

And we do in fact buy

295

00:07:30,074 --> 00:07:31,492

our dog food at home online.

296

00:07:31,492 --> 00:07:32,618

And it’s delivered.

297

00:07:32,618 --> 00:07:32,869

Yeah.

298

00:07:33,786 --> 00:07:34,996

Not from Pets.com.

299

00:07:34,996 --> 00:07:36,414

Some other company, yeah.

300

00:07:36,747 --> 00:07:37,957

In an interview earlier this year,

301

00:07:37,957 --> 00:07:39,625

you suggested that colleges

302

00:07:39,625 --> 00:07:41,419

should dedicate at least a third

303

00:07:41,669 --> 00:07:43,713

of their curricula to AI literacy.

304

00:07:44,255 --> 00:07:45,631

Say more about how education

305

00:07:45,631 --> 00:07:46,507

needs to evolve

306

00:07:46,507 --> 00:07:48,176

to provide value in this era.

307

00:07:48,593 --> 00:07:49,886

To be clear, right now,

308

00:07:49,886 --> 00:07:51,345

we do not have the professors

309

00:07:51,345 --> 00:07:52,972

to teach AI literacy.

310

00:07:52,972 --> 00:07:54,849

So my suggestion is hypothetical.

311

00:07:54,849 --> 00:07:57,143

It’s not something we actually can do.

312

00:07:57,143 --> 00:07:59,645

But over the course of the next 20 years,

313

00:07:59,645 --> 00:08:01,355

basically almost every job

314

00:08:01,355 --> 00:08:03,232

I think even gardener, carpenter,

315

00:08:03,232 --> 00:08:04,358

physical jobs.

316

00:08:04,442 --> 00:08:05,485

If you’re in sports,

317

00:08:05,485 --> 00:08:08,029

The San Antonio Spurs are claiming they’re now

318

00:08:08,029 --> 00:08:11,032

the AI-ready NBA basketball team.

319

00:08:11,073 --> 00:08:13,493

So if all jobs will require AI,

320

00:08:13,493 --> 00:08:14,535

we need to be teaching

321

00:08:14,535 --> 00:08:17,121

and training everyone in AI skills.

322

00:08:17,121 --> 00:08:17,830

It’s quite hard

323

00:08:17,830 --> 00:08:20,166

because AI now is changing so rapidly.

324

00:08:20,166 --> 00:08:21,918

You could learn something six months ago

325

00:08:21,918 --> 00:08:23,669

and then you’ve got to learn a new thing.

326

00:08:23,669 --> 00:08:25,213

So it will take a lot of time,

327

00:08:25,213 --> 00:08:26,422

and we need to teach people

328

00:08:26,422 --> 00:08:27,673

how to stay current.

329

00:08:27,673 --> 00:08:29,926

That itself changes.

330

00:08:29,926 --> 00:08:32,386

But what we need to do, in my opinion,

331

00:08:32,386 --> 00:08:34,096

is have writing

332

00:08:34,096 --> 00:08:34,847

be prominent

333

00:08:34,847 --> 00:08:36,974

in regular education at all levels,

334

00:08:36,974 --> 00:08:38,017

and I mean in-person,

335

00:08:38,017 --> 00:08:39,477

face-to-face writing,

336

00:08:39,477 --> 00:08:40,603

not take-home homework

337

00:08:40,603 --> 00:08:42,146

where GPT does it for you.

338

00:08:42,772 --> 00:08:45,733

Writing, numeracy, and AI.

339

00:08:45,858 --> 00:08:47,652

Those are the things we need to learn.

340

00:08:47,652 --> 00:08:48,319

The other things

341

00:08:48,319 --> 00:08:50,488

you can mostly learn from AI.

342

00:08:50,488 --> 00:08:51,280

If you can deal with

343

00:08:51,280 --> 00:08:52,823

numbers mentally, right?

344

00:08:52,907 --> 00:08:54,367

That means you can think well

345

00:08:54,408 --> 00:08:55,993

and work with the AIs,

346

00:08:55,993 --> 00:08:57,245

you’re in great shape.

347

00:08:57,370 --> 00:08:58,913

We need to have further training

348

00:08:58,913 --> 00:09:00,748

in all kinds of specialized skills.

349

00:09:00,748 --> 00:09:02,250

Say you want to be a chemist.

350

00:09:02,250 --> 00:09:02,917

Well, what do you need to

351

00:09:02,917 --> 00:09:04,168

learn about the lab?

352

00:09:04,168 --> 00:09:06,045

But you start off by emphasizing

353

00:09:06,045 --> 00:09:06,921

those three things:

354

00:09:06,921 --> 00:09:09,382

AI, writing, thinking, and math.

355

00:09:10,925 --> 00:09:11,592

You know, we talked a

356

00:09:11,592 --> 00:09:12,552

lot about Frontier Firms,

357

00:09:12,552 --> 00:09:13,219

but you’re talking about

358

00:09:13,219 --> 00:09:14,679

Frontier universities in a way.

359

00:09:14,679 --> 00:09:15,596

And you’re saying that

360

00:09:15,596 --> 00:09:17,056

there simply are none now.

361

00:09:17,056 --> 00:09:17,723

Exactly.

362

00:09:18,140 --> 00:09:19,850

Arizona State is maybe

363

00:09:19,850 --> 00:09:21,352

the most forward-thinking.

364

00:09:21,978 --> 00:09:22,812

I have some hopes

365

00:09:22,812 --> 00:09:24,230

for the University of Austin.

366

00:09:24,230 --> 00:09:25,982

I think they will be trying.

367

00:09:26,482 --> 00:09:28,276

But, you know, personnel is policy,

368

00:09:28,276 --> 00:09:29,318

as they say.

369

00:09:29,318 --> 00:09:30,319

And in universities,

370

00:09:30,319 --> 00:09:32,196

people feel threatened by AI.

371

00:09:32,196 --> 00:09:34,031

They know it will change their jobs.

372

00:09:34,031 --> 00:09:35,992

It doesn’t have to make them worse.

373

00:09:35,992 --> 00:09:37,201

But I see very,

374

00:09:37,201 --> 00:09:37,827

very slow

375

00:09:37,827 --> 00:09:39,787

speeds of adaptation and adjustment.

376

00:09:40,997 --> 00:09:41,914

Adoption is just

377

00:09:41,914 --> 00:09:43,457

such a fundamental question,

378

00:09:43,457 --> 00:09:45,084

and so is the word you just used,

379

00:09:45,084 --> 00:09:46,586

which is adaptation.

380

00:09:46,669 --> 00:09:48,713

There is this kind of mindset shift,

381

00:09:48,754 --> 00:09:50,214

habit shift, skills shift,

382

00:09:50,214 --> 00:09:51,340

even within workplaces

383

00:09:51,340 --> 00:09:53,509

that already have some

384

00:09:53,509 --> 00:09:55,511

AI collaborators or digital employees.

385

00:09:55,511 --> 00:09:56,804

Is that fair to say?

386

00:09:56,804 --> 00:09:58,055

Absolutely fair to say.

387

00:09:58,055 --> 00:09:58,848

It’s hard

388

00:09:59,140 --> 00:10:00,766

for just about any institution

389

00:10:00,766 --> 00:10:01,976

to make the adjustment,

390

00:10:01,976 --> 00:10:03,978

including major AI companies.

391

00:10:03,978 --> 00:10:05,146

They don’t do most of their own

392

00:10:05,146 --> 00:10:07,565

internal tasks by AI, right?

393

00:10:07,565 --> 00:10:08,316

You would think, well,

394

00:10:08,316 --> 00:10:09,650

it should be easy for them.

395

00:10:09,650 --> 00:10:10,484

And, you know,

396

00:10:10,484 --> 00:10:11,986

I think they’ll manage over time,

397

00:10:11,986 --> 00:10:13,279

but it’s not easy for anyone.

398

00:10:14,030 --> 00:10:14,905

You’re saying,

399

00:10:14,905 --> 00:10:16,032

and there have also been

400

00:10:16,032 --> 00:10:17,617

a number of studies saying gen AI

401

00:10:17,617 --> 00:10:19,994

has had minimal impact

402

00:10:19,994 --> 00:10:21,495

on the labor market,

403

00:10:21,495 --> 00:10:22,997

but we are also seeing headlines

404

00:10:22,997 --> 00:10:25,124

about large-scale job cuts

405

00:10:25,124 --> 00:10:26,083

that are being framed

406

00:10:26,083 --> 00:10:28,377

as the result of embracing AI.

407

00:10:28,377 --> 00:10:30,296

Do you buy that framing,

408

00:10:30,296 --> 00:10:33,090

or is there a large corporate disconnect?

409

00:10:33,299 --> 00:10:35,301

I’m not persuaded by the framing.

410

00:10:35,301 --> 00:10:36,719

There could be some truth to it.

411

00:10:36,719 --> 00:10:38,846

I don’t think we know how much yet.

412

00:10:38,846 --> 00:10:39,972

I think there’s a good chance

413

00:10:39,972 --> 00:10:41,557

we’re headed for a recession

414

00:10:41,724 --> 00:10:43,684

for non-AI related reasons.

415

00:10:44,060 --> 00:10:45,895

And then of course, people get laid off.

416

00:10:46,145 --> 00:10:48,105

AI is an easy thing to blame.

417

00:10:48,105 --> 00:10:50,191

“Oh, it’s not me, it’s the AI.”

418

00:10:50,441 --> 00:10:52,485

What I suspect will happen

419

00:10:52,485 --> 00:10:54,654

is as AI becomes more important,

420

00:10:54,654 --> 00:10:56,447

new hiring will slow down.

421

00:10:56,447 --> 00:10:57,823

I’m not sure that many people

422

00:10:57,823 --> 00:10:59,950

would be fired in the US.

423

00:10:59,950 --> 00:11:01,827

If it’s a call center in the Philippines

424

00:11:01,827 --> 00:11:03,245

or in Hyderabad, India,

425

00:11:03,412 --> 00:11:04,205

there, I can see

426

00:11:04,205 --> 00:11:05,122

people being laid off

427

00:11:05,122 --> 00:11:07,249

in the early stages because of AI.

428

00:11:07,249 --> 00:11:08,584

But most US jobs,

429

00:11:08,584 --> 00:11:09,543

the threat to your job,

430

00:11:09,543 --> 00:11:10,419

it’s not AI.

431

00:11:10,711 --> 00:11:11,587

It’s some other person

432

00:11:11,587 --> 00:11:13,547

who uses AI better than you do.

433

00:11:13,547 --> 00:11:14,465

And there’s going to be a lot of

434

00:11:14,465 --> 00:11:15,716

job turnover due to that.

435

00:11:16,258 --> 00:11:18,761

Do we risk making AI into the boogeyman?

436

00:11:19,220 --> 00:11:21,013

We’re making AI into the boogeyman

437

00:11:21,013 --> 00:11:21,514

right now.

438

00:11:21,514 --> 00:11:22,431

Just go to Twitter

439

00:11:22,431 --> 00:11:23,933

and you see people upset

440

00:11:23,933 --> 00:11:25,518

at all sorts of people, companies,

441

00:11:25,518 --> 00:11:26,560

AI systems.

442

00:11:26,560 --> 00:11:27,395

My goodness,

443

00:11:27,395 --> 00:11:28,479

they’re so negative

444

00:11:28,479 --> 00:11:29,689

about one of humanity’s

445

00:11:29,689 --> 00:11:31,399

most marvelous inventions.

446

00:11:32,608 --> 00:11:33,984

Is that what they’re saying on Twitter,

447

00:11:33,984 --> 00:11:34,735

or your response?

448

00:11:34,735 --> 00:11:35,778

What they’re saying on Twitter

449

00:11:35,778 --> 00:11:36,779

is so negative.

450

00:11:37,071 --> 00:11:38,989

There are so many villains in the story,

451

00:11:38,989 --> 00:11:40,491

and we’ve done something amazing,

452

00:11:40,491 --> 00:11:41,617

but that’s common.

453

00:11:41,826 --> 00:11:43,327

People actually do enjoy stagnation

454

00:11:43,327 --> 00:11:44,912

and change is very scary.

455

00:11:44,912 --> 00:11:45,538

That’s right.

456

00:11:45,955 --> 00:11:48,666

And you’re talking about a realignment of

457

00:11:48,666 --> 00:11:50,000

business, education,

458

00:11:50,000 --> 00:11:51,252

and culture

459

00:11:51,252 --> 00:11:53,796

to try to integrate this technology.

460

00:11:54,004 --> 00:11:55,256

Is it that disruptive

461

00:11:55,381 --> 00:11:58,050

that that’s what we have to consider?

462

00:11:58,050 --> 00:11:58,968

I believe so.

463

00:11:58,968 --> 00:12:00,261

And you used the word scary.

464

00:12:00,261 --> 00:12:00,928

That’s correct.

465

00:12:00,928 --> 00:12:03,639

I would also say it can be demoralizing.

466

00:12:03,639 --> 00:12:06,642

So if I ask the best AI systems questions

467

00:12:06,642 --> 00:12:07,852

about economics,

468

00:12:07,852 --> 00:12:09,103

usually their answers

469

00:12:09,103 --> 00:12:10,730

are better than my answers.

470

00:12:10,730 --> 00:12:12,148

Now how should I feel about that?

471

00:12:13,149 --> 00:12:14,066

I would say I’m more

472

00:12:14,066 --> 00:12:15,943

delighted than upset,

473

00:12:15,943 --> 00:12:16,861

but I understand

474

00:12:16,861 --> 00:12:17,820

when people don’t like it.

475

00:12:17,820 --> 00:12:19,029

I’m like, “Whoa.

476

00:12:19,029 --> 00:12:20,906

What am I good for now?”

477

00:12:20,906 --> 00:12:22,616

And I have answers to that question,

478

00:12:22,616 --> 00:12:24,577

but it requires adjustment from me.

479

00:12:24,952 --> 00:12:25,619

Right.

480

00:12:26,036 --> 00:12:28,038

So now put yourself in the context

481

00:12:28,038 --> 00:12:29,790

of somebody at work

482

00:12:29,790 --> 00:12:30,916

who is experiencing this.

483

00:12:31,208 --> 00:12:33,377

How do you manage for

484

00:12:33,377 --> 00:12:35,880

those potential feelings of inadequacy

485

00:12:35,880 --> 00:12:37,757

or the change in

486

00:12:38,382 --> 00:12:39,884

job style and behavior

487

00:12:40,217 --> 00:12:43,345

that will be necessary to adapt?

488

00:12:44,138 --> 00:12:45,765

I don’t think we know yet.

489

00:12:45,765 --> 00:12:46,599

This is a reason

490

00:12:46,599 --> 00:12:48,058

why I’m bullish on startups

491

00:12:48,058 --> 00:12:48,934

more generally.

492

00:12:48,934 --> 00:12:49,935

If you have a startup

493

00:12:49,935 --> 00:12:50,853

and you tell everyone

494

00:12:50,853 --> 00:12:51,854

you’re hiring,

495

00:12:51,854 --> 00:12:53,105

“Well, you need to know AI,

496

00:12:53,105 --> 00:12:55,024

we’re going to run things around AI,”

497

00:12:55,024 --> 00:12:57,193

the morale problem is much lower.

498

00:12:57,401 --> 00:12:58,861

Tobi Lütke at Shopify

499

00:12:58,861 --> 00:13:00,988

tried to do this with his famous memo.

500

00:13:00,988 --> 00:13:03,115

He said, “Anyone we hire at Shopify,

501

00:13:03,115 --> 00:13:03,949

from here on out,

502

00:13:03,949 --> 00:13:06,035

has to be very good with AI.”

503

00:13:06,035 --> 00:13:07,328

That was an excellent move.

504

00:13:07,703 --> 00:13:08,329

But it’s hard to

505

00:13:08,329 --> 00:13:09,288

see that through

506

00:13:09,288 --> 00:13:10,790

and then actually reward the people

507

00:13:10,790 --> 00:13:12,082

who do a good job of it.

508

00:13:12,082 --> 00:13:13,334

When a lot of your people,

509

00:13:13,417 --> 00:13:14,710

they’re probably using

510

00:13:14,710 --> 00:13:16,128

something like ChatGPT

511

00:13:16,128 --> 00:13:17,421

in their daily lives,

512

00:13:17,421 --> 00:13:18,631

but they haven’t really

513

00:13:18,839 --> 00:13:20,132

adjusted to the fact

514

00:13:20,132 --> 00:13:21,217

that it will dominate

515

00:13:21,217 --> 00:13:22,802

a lot of their workday.

516

00:13:23,260 --> 00:13:24,845

Let’s talk about sectors for a minute.

517

00:13:25,179 --> 00:13:26,806

What are the sectors

518

00:13:26,806 --> 00:13:28,265

you think in addition to

519

00:13:28,265 --> 00:13:29,225

maybe the types of work

521

00:13:30,559 --> 00:13:32,102

that are most likely to feel AI’s effects

522

00:13:32,102 --> 00:13:33,312

most intensely in the next,

523

00:13:33,312 --> 00:13:34,480

let’s say, year to 18 months?

524

00:13:35,439 --> 00:13:36,565

Year to 18 months.

525

00:13:36,565 --> 00:13:37,900

I think it will take

526

00:13:37,900 --> 00:13:39,485

a few more years

527

00:13:39,485 --> 00:13:41,487

than that to really matter.

528

00:13:41,487 --> 00:13:43,072

So there’s plenty of places

529

00:13:43,072 --> 00:13:44,240

like, say, law firms

530

00:13:44,240 --> 00:13:46,992

where AI is already super powerful,

531

00:13:46,992 --> 00:13:49,453

but you’re not allowed to send your query

532

00:13:49,453 --> 00:13:52,331

to someone’s foundation model out there

533

00:13:52,331 --> 00:13:53,541

in the Bay Area

534

00:13:53,541 --> 00:13:55,835

because it’s confidential information.

535

00:13:55,835 --> 00:13:56,961

So that will work

536

00:13:56,961 --> 00:13:57,419

when, say,

537

00:13:57,419 --> 00:13:59,338

a law firm on its own

538

00:13:59,338 --> 00:14:01,090

hard drive owns the whole model

539

00:14:01,090 --> 00:14:01,882

and controls it

540

00:14:01,882 --> 00:14:02,508

and doesn’t have to

541

00:14:02,508 --> 00:14:03,801

send the query and the answer

542

00:14:03,801 --> 00:14:04,718

anywhere.

543

00:14:04,927 --> 00:14:06,053

I don’t see that happening

544

00:14:06,053 --> 00:14:07,304

in the next 18 months.

545

00:14:07,304 --> 00:14:08,472

I can imagine

546

00:14:08,472 --> 00:14:10,516

it happening in the next three years.

547

00:14:10,850 --> 00:14:13,352

But right now, I think entertainment,

548

00:14:13,561 --> 00:14:15,437

the arts, graphic design,

549

00:14:15,437 --> 00:14:17,022

obviously programming.

550

00:14:17,356 --> 00:14:18,482

I think functions

551

00:14:18,482 --> 00:14:20,901

such as finance and customer service,

552

00:14:20,901 --> 00:14:22,862

they’re often pretty repetitive.

553

00:14:22,862 --> 00:14:23,362

That to me

554

00:14:23,362 --> 00:14:24,113

seems more like

555

00:14:24,113 --> 00:14:25,364

a two or three year thing.

556

00:14:25,364 --> 00:14:27,324

Not so much the next year.

557

00:14:27,324 --> 00:14:29,618

We just don’t quite seem to be there

558

00:14:29,618 --> 00:14:31,245

to have working agents

559

00:14:31,245 --> 00:14:33,122

that are sufficiently reliable

560

00:14:33,122 --> 00:14:33,914

that you would turn

561

00:14:33,914 --> 00:14:34,540

you know, the candy

562

00:14:34,540 --> 00:14:36,125

store over to them right now.

563

00:14:36,125 --> 00:14:37,167

It will come.

564

00:14:37,167 --> 00:14:38,377

I’m really quite sure.

565

00:14:38,377 --> 00:14:39,670

Less than five years,

566

00:14:39,670 --> 00:14:41,005

but not in the next year.

567

00:14:41,088 --> 00:14:42,464

Let’s talk about humans for a minute.

568

00:14:42,464 --> 00:14:43,340

In your recent writing,

569

00:14:43,340 --> 00:14:44,258

you have been exploring

570

00:14:44,258 --> 00:14:45,009

this sort of question

571

00:14:45,009 --> 00:14:46,010

about

572

00:14:46,010 --> 00:14:47,761

how AI might change

573

00:14:47,761 --> 00:14:49,305

what it means to be human.

574

00:14:49,305 --> 00:14:50,347

And we’ve talked about that

575

00:14:50,347 --> 00:14:52,474

a little bit in the context of learning.

576

00:14:52,474 --> 00:14:54,643

But what do you think humans

577

00:14:54,643 --> 00:14:58,022

most need to protect or preserve?

578

00:14:58,856 --> 00:14:59,940

You’ll need to build out

579

00:14:59,940 --> 00:15:01,859

your personal network much more.

580

00:15:01,859 --> 00:15:02,735

Everyone now

581

00:15:02,735 --> 00:15:04,695

has a perfectly written cover letter,

582

00:15:04,695 --> 00:15:06,322

so that does not distinguish you.

583

00:15:06,864 --> 00:15:07,740

Who can actually vouch

584

00:15:07,740 --> 00:15:08,282

for you,

585

00:15:08,282 --> 00:15:09,491

recommend you,

586

00:15:09,491 --> 00:15:10,951

speak to what you’ve done with them

587

00:15:10,951 --> 00:15:12,244

or for them.

588

00:15:12,244 --> 00:15:13,954

That was already super important.

589

00:15:13,954 --> 00:15:15,873

Now it’s much more important.

590

00:15:15,873 --> 00:15:16,999

I think your charisma,

591

00:15:16,999 --> 00:15:18,542

your physical presence.

592

00:15:18,542 --> 00:15:20,502

I hesitate to use the word looks

593

00:15:20,502 --> 00:15:22,004

because I don’t think simply

594

00:15:22,004 --> 00:15:23,005

being attractive looking

595

00:15:23,005 --> 00:15:25,007

is necessarily the main thing.

596

00:15:25,215 --> 00:15:26,800

But to have looks that fit

597

00:15:26,800 --> 00:15:27,843

what people think

598

00:15:27,843 --> 00:15:29,929

your looks ought to be like,

599

00:15:29,929 --> 00:15:32,348

and to be persuasive face-to-face,

600

00:15:32,681 --> 00:15:34,808

again, it’s always mattered a great deal.

601

00:15:34,808 --> 00:15:37,227

That’s just on the ascendency,

602

00:15:37,227 --> 00:15:40,189

the ability and willingness to travel,

603

00:15:40,189 --> 00:15:42,733

and some sort of interpersonal skill

604

00:15:42,733 --> 00:15:43,567

that reflects

605

00:15:43,567 --> 00:15:46,487

knowing how you can fit into a system

606

00:15:46,487 --> 00:15:48,489

and when you should defer to the AI,

607

00:15:48,489 --> 00:15:50,699

when you should not defer to the AI.

608

00:15:50,699 --> 00:15:51,867

And how can you persuade

609

00:15:51,867 --> 00:15:53,410

the people you’re working with

610

00:15:53,410 --> 00:15:55,621

to actually learn more about the AI?

611

00:15:55,621 --> 00:15:56,872

That’s a pretty tricky skill.

612

00:15:56,872 --> 00:15:58,958

We’re not used to testing for that,

613

00:15:58,958 --> 00:16:00,250

but we will need to test for it

614

00:16:00,250 --> 00:16:01,502

in the future.

615

00:16:01,502 --> 00:16:03,087

Do you mean literally test for it

616

00:16:03,087 --> 00:16:04,505

like an aptitude test?

617

00:16:04,505 --> 00:16:05,255

Literally test for it. Yeah.

618

00:16:05,255 --> 00:16:06,840

I asked Sam Altman recently

619

00:16:06,840 --> 00:16:08,467

in my podcast with him,

620

00:16:08,467 --> 00:16:09,551

“Well, how do you make sure

621

00:16:09,551 --> 00:16:11,095

you’re hiring people who,

622

00:16:11,095 --> 00:16:11,887

when the time comes,

623

00:16:11,887 --> 00:16:13,889

are willing to step aside for AI

624

00:16:13,889 --> 00:16:14,515

and perform

625

00:16:14,515 --> 00:16:16,350

some other function in their jobs?”

626

00:16:16,350 --> 00:16:17,434

I don’t think any of us know

627

00:16:17,434 --> 00:16:18,352

how to do that yet.

628

00:16:18,352 --> 00:16:20,270

We’re used to asking other questions.

629

00:16:21,397 --> 00:16:22,272

I mean, certainly

630

00:16:22,272 --> 00:16:23,357

people aren’t good at doing it

631

00:16:23,565 --> 00:16:24,441

when a human comes along.

632

00:16:24,942 --> 00:16:25,609

That’s right.

633

00:16:25,609 --> 00:16:27,319

Maybe the AI is less threatening, right?

634

00:16:27,319 --> 00:16:28,946

Maybe you could sort of say, well, it is.

635

00:16:28,946 --> 00:16:29,780

It does have access

636

00:16:29,780 --> 00:16:30,990

to all the human knowledge in the world.

637

00:16:30,990 --> 00:16:32,074

So I guess

638

00:16:32,074 --> 00:16:33,617

it’s doing a better job than me.

639

00:16:33,826 --> 00:16:34,702

What will... I mean, I guess, on that note,

640

00:16:34,702 --> 00:16:37,496

then what will we need to let go of

641

00:16:37,496 --> 00:16:38,330

in addition to,

642

00:16:38,330 --> 00:16:39,540

it sounds like, a little bit of ego?

643

00:16:39,999 --> 00:16:41,792

I’m a university professor.

644

00:16:41,792 --> 00:16:44,211

I give students comments on their papers

645

00:16:44,211 --> 00:16:45,170

all the time.

646

00:16:45,170 --> 00:16:46,964

I now give them AI comments

647

00:16:46,964 --> 00:16:48,257

and my own comments.

648

00:16:48,257 --> 00:16:50,426

The AI comments are typically better

649

00:16:50,426 --> 00:16:51,677

than my comments,

650

00:16:51,677 --> 00:16:53,178

but my comments see things

651

00:16:53,178 --> 00:16:54,555

the AI doesn’t,

652

00:16:54,555 --> 00:16:56,140

like how the paper will play

653

00:16:56,140 --> 00:16:57,975

to certain human audiences,

654

00:16:57,975 --> 00:16:58,809

maybe what journal

655

00:16:58,809 --> 00:17:00,185

it should be published in.

656

00:17:00,644 --> 00:17:02,021

Something maybe temperamental

657

00:17:02,021 --> 00:17:02,771

about the paper

658

00:17:02,771 --> 00:17:04,732

that the AI is in a sense too

659

00:17:04,732 --> 00:17:06,525

focused on the substance,

660

00:17:06,525 --> 00:17:07,526

but on the substance,

661

00:17:07,526 --> 00:17:10,070

the AI just wipes the floor with me.

662

00:17:10,070 --> 00:17:13,032

So I need to invest more in learning

663

00:17:13,032 --> 00:17:14,450

these other skills

664

00:17:14,450 --> 00:17:16,535

and just skills of persuasion.

665

00:17:16,535 --> 00:17:18,287

How can I convince the students

666

00:17:18,287 --> 00:17:19,872

to take the AI seriously?

667

00:17:20,497 --> 00:17:21,040

Going back

668

00:17:21,040 --> 00:17:21,790

to sort of

669

00:17:21,790 --> 00:17:23,542

amplifying our essential humanness

670

00:17:23,542 --> 00:17:24,460

and making that maybe

671

00:17:24,460 --> 00:17:25,627

part of our education.

672

00:17:25,919 --> 00:17:26,962

I feel like when we say

673

00:17:26,962 --> 00:17:27,588

something like that,

674

00:17:27,588 --> 00:17:28,839

it feels like such a big lift, like,

675

00:17:28,839 --> 00:17:29,840

oh, how will we ever

676

00:17:30,049 --> 00:17:31,675

retool our education system

677

00:17:31,675 --> 00:17:32,926

to focus on humanity

678

00:17:32,926 --> 00:17:35,929

and the arts and interpersonal skills?

679

00:17:36,096 --> 00:17:37,598

But we really did used to have that.

680

00:17:37,598 --> 00:17:38,932

You know, it’s sort of fair

681

00:17:38,932 --> 00:17:42,186

to say we overemphasized technology.

682

00:17:42,186 --> 00:17:42,811

And I mean, you’re

683

00:17:42,811 --> 00:17:43,771

an economics professor,

684

00:17:43,771 --> 00:17:46,065

but numbers and pure economics

685

00:17:46,065 --> 00:17:48,025

and data-driven decision making,

686

00:17:48,025 --> 00:17:50,652

and it feels doable to me

687

00:17:50,652 --> 00:17:51,904

that we could sort of return

688

00:17:51,904 --> 00:17:53,489

to a different educational model

689

00:17:53,489 --> 00:17:56,366

if we think the value will be there

690

00:17:56,366 --> 00:17:57,201

in the end.

691

00:17:57,451 --> 00:17:59,495

It’s not intrinsically hard to do at all.

692

00:17:59,495 --> 00:18:00,954

It’s a matter of will,

693

00:18:00,954 --> 00:18:02,122

and that the insiders

694

00:18:02,122 --> 00:18:04,708

in many institutions, schools included,

695

00:18:04,708 --> 00:18:06,710

they just don’t want to change very much.

696

00:18:06,710 --> 00:18:08,587

So those are hard nuts to crack,

697

00:18:08,587 --> 00:18:10,380

but just intrinsically,

698

00:18:10,380 --> 00:18:10,839

we’ve done it

699

00:18:10,839 --> 00:18:11,965

many times in the past,

700

00:18:11,965 --> 00:18:13,634

as you point out, and we’ll do it again.

701

00:18:13,634 --> 00:18:15,302

But like, how long and painful

702

00:18:15,302 --> 00:18:16,553

will the transition be?

703

00:18:16,553 --> 00:18:18,305

That’s what worries me.

704

00:18:18,305 --> 00:18:19,139

How long and painful

705

00:18:19,139 --> 00:18:20,474

do you think the transition will be?

706

00:18:21,433 --> 00:18:22,184

I don’t know,

707

00:18:22,184 --> 00:18:24,478

dragging everyone kicking and screaming.

708

00:18:24,478 --> 00:18:26,063

It’s decades, in my view.

709

00:18:26,063 --> 00:18:26,522

Yeah.

710

00:18:26,522 --> 00:18:27,606

But some places will adjust

711

00:18:27,606 --> 00:18:28,565

pretty quickly.

712

00:18:28,857 --> 00:18:30,275

Obviously we cannot prove a negative.

713

00:18:30,275 --> 00:18:31,235

We can’t prove an ROI

714

00:18:31,235 --> 00:18:32,778

that doesn’t yet exist.

715

00:18:32,778 --> 00:18:33,529

But it seems that

716

00:18:33,529 --> 00:18:35,239

the fundamental question,

717

00:18:35,239 --> 00:18:36,990

in terms of overcoming

718

00:18:36,990 --> 00:18:37,908

the kicking and screaming,

719

00:18:37,908 --> 00:18:38,742

if it were a toddler,

720

00:18:38,742 --> 00:18:39,535

you would say,

721

00:18:39,535 --> 00:18:41,703

“There’s a cookie at the end.”

722

00:18:41,703 --> 00:18:44,748

And I wonder how we attempt to measure

723

00:18:44,873 --> 00:18:47,876

the future value that makes this

724

00:18:47,918 --> 00:18:50,295

transition and this upheaval worthwhile?

725

00:18:50,671 --> 00:18:51,713

My personal view,

726

00:18:51,713 --> 00:18:53,799

which is speculative, to be clear,

727

00:18:53,799 --> 00:18:55,175

is that at some point

728

00:18:55,175 --> 00:18:56,301

younger people

729

00:18:56,301 --> 00:18:57,219

will basically not

730

00:18:57,219 --> 00:18:58,971

die of diseases anymore

731

00:18:58,971 --> 00:19:00,180

and will live to be 96

732

00:19:00,180 --> 00:19:02,933

or 97 years old and die of old age.

733

00:19:03,183 --> 00:19:04,434

That’s a huge cookie.

734

00:19:04,434 --> 00:19:05,477

Am I going to get that?

735

00:19:05,477 --> 00:19:06,812

I’m not counting on it.

736

00:19:06,812 --> 00:19:07,813

There’s some chance

737

00:19:07,813 --> 00:19:09,606

Even-money bet, I would say no.

738

00:19:09,857 --> 00:19:12,609

And you will have a life where

739

00:19:12,609 --> 00:19:14,236

anything you want to learn,

740

00:19:14,236 --> 00:19:16,488

you can learn very, very well.

741

00:19:16,488 --> 00:19:17,990

People will do different things

742

00:19:17,990 --> 00:19:18,699

with that.

743

00:19:18,699 --> 00:19:21,535

Some people will do cognitive offloading

744

00:19:21,535 --> 00:19:24,288

and actually become mentally lazier,

745

00:19:24,288 --> 00:19:27,416

and that will be a larger social problem.

746

00:19:27,416 --> 00:19:28,917

But the people who want to learn,

747

00:19:28,917 --> 00:19:30,794

it will just be a new paradise for them.

748

00:19:30,794 --> 00:19:32,171

I would say it is already.

749

00:19:32,171 --> 00:19:34,256

I consider myself one of those people.

750

00:19:34,256 --> 00:19:35,507

I’m happier.

751

00:19:35,507 --> 00:19:37,217

I use it to organize my travel,

752

00:19:37,217 --> 00:19:38,010

where I should eat,

753

00:19:38,010 --> 00:19:39,178

what book I should read.

754

00:19:39,178 --> 00:19:40,637

How to make sense of something in a book

755

00:19:40,637 --> 00:19:41,680

I don’t know.

756

00:19:41,680 --> 00:19:43,640

I’ll use it dozens of times every day,

757

00:19:43,640 --> 00:19:44,474

and it’s great.

758

00:19:44,641 --> 00:19:44,975

Yeah.

759

00:19:45,976 --> 00:19:46,393

Do you

760

00:19:46,393 --> 00:19:47,269

think that there’s a role

761

00:19:47,269 --> 00:19:49,521

for government or public policy,

762

00:19:49,521 --> 00:19:50,397

one way or the other,

763

00:19:50,397 --> 00:19:51,315

in smoothing

764

00:19:51,315 --> 00:19:52,774

the economic adjustment,

765

00:19:52,774 --> 00:19:55,027

let’s say, to widespread AI adoption?

766

00:19:55,569 --> 00:19:56,111

There will be.

767

00:19:56,111 --> 00:19:58,739

I’m not sure we know yet what it is.

768

00:19:58,739 --> 00:20:00,407

The immediate priority for me

769

00:20:00,407 --> 00:20:01,909

is just to get more energy

770

00:20:01,909 --> 00:20:03,493

supply out there

771

00:20:03,493 --> 00:20:05,954

so we can actually have a transition,

772

00:20:05,954 --> 00:20:08,540

since the transition now is so small.

773

00:20:08,540 --> 00:20:10,334

I’m a little wary of proposals

774

00:20:10,334 --> 00:20:12,628

to imagine 13 different problems

775

00:20:12,628 --> 00:20:14,213

in advance and solve them.

776

00:20:14,213 --> 00:20:15,339

I think we do better

777

00:20:15,339 --> 00:20:17,716

in the American system of government,

778

00:20:17,716 --> 00:20:20,052

often by waiting till problems come along

779

00:20:20,052 --> 00:20:21,386

when there’s high uncertainty

780

00:20:21,386 --> 00:20:22,971

and then addressing them piecemeal,

781

00:20:22,971 --> 00:20:24,223

whether it’s at federal, state,

782

00:20:24,223 --> 00:20:25,432

local level.

783

00:20:25,432 --> 00:20:26,808

So I’m skeptical

784

00:20:26,808 --> 00:20:27,809

of people who think they know

785

00:20:27,809 --> 00:20:29,478

how everything’s going to go

786

00:20:29,478 --> 00:20:30,771

and they have the magic formula

787

00:20:30,771 --> 00:20:31,855

for setting it right.

788

00:20:32,272 --> 00:20:33,565

I mean, I’m skeptical of that too,

789

00:20:33,565 --> 00:20:35,150

but that is our whole podcast, Tyler.

790

00:20:35,567 --> 00:20:36,193

I know.

791

00:20:36,193 --> 00:20:37,069

I understand.

792

00:20:37,152 --> 00:20:37,444

But the

793

00:20:37,444 --> 00:20:38,403

role of government,

794

00:20:38,403 --> 00:20:39,780

government laws are not

795

00:20:39,780 --> 00:20:41,323

easily repealed, right?

796

00:20:41,698 --> 00:20:43,033

If you pass something,

797

00:20:43,033 --> 00:20:44,868

you tend to stick with it.

798

00:20:44,868 --> 00:20:46,245

So I think we need to be

799

00:20:46,245 --> 00:20:47,454

a little cautious.

800

00:20:47,454 --> 00:20:48,497

Recognize things

801

00:20:48,497 --> 00:20:50,165

definitely will be needed.

802

00:20:50,165 --> 00:20:51,250

The first imperative

803

00:20:51,250 --> 00:20:52,793

will be better and different

804

00:20:52,793 --> 00:20:55,003

national security policies,

805

00:20:55,003 --> 00:20:56,797

which we’ve done some of that already.

806

00:20:56,797 --> 00:20:58,840

But those are tough, complicated issues

807

00:20:58,840 --> 00:21:00,884

no matter what your point of view.

808

00:21:00,884 --> 00:21:02,135

And then over time, well,

809

00:21:02,135 --> 00:21:03,887

a lot of our K-12 schools

810

00:21:03,887 --> 00:21:04,888

are governmental,

811

00:21:04,888 --> 00:21:06,265

so they should change.

812

00:21:06,473 --> 00:21:07,516

But it’s a big mistake

813

00:21:07,516 --> 00:21:08,725

to set out the formula.

814

00:21:08,725 --> 00:21:09,726

What you really want to do

815

00:21:09,726 --> 00:21:11,228

is create an environment

816

00:21:11,228 --> 00:21:12,020

where different states,

817

00:21:12,020 --> 00:21:13,563

counties, cities, experiment.

818

00:21:13,563 --> 00:21:15,524

And over time you figure out

819

00:21:15,524 --> 00:21:16,817

what is best and government

820

00:21:16,817 --> 00:21:17,943

schools should do it that

821

00:21:17,943 --> 00:21:19,361

way rather than all top down.

822

00:21:20,529 --> 00:21:21,738

It feels like that is advice

823

00:21:21,738 --> 00:21:23,198

that could apply to businesses too,

824

00:21:23,198 --> 00:21:24,741

as we try to advise leaders

825

00:21:24,741 --> 00:21:26,034

on how to prepare,

826

00:21:26,034 --> 00:21:27,119

even if this change

827

00:21:27,119 --> 00:21:28,370

comes from the outside in,

828

00:21:28,370 --> 00:21:30,122

even if it comes from those employees

829

00:21:30,122 --> 00:21:31,123

who are doing

830

00:21:31,123 --> 00:21:32,207

everything that you just said.

831

00:21:32,207 --> 00:21:35,711

You know, using an LLM 50 times a day,

832

00:21:36,586 --> 00:21:37,129

so much

833

00:21:37,129 --> 00:21:37,796

so that it becomes

834

00:21:37,796 --> 00:21:40,173

an ingrained thought partner.

835

00:21:40,924 --> 00:21:42,884

How should leaders

836

00:21:42,884 --> 00:21:45,887

think about adapting, adopting

837

00:21:46,221 --> 00:21:47,639

without getting locked in

838

00:21:47,639 --> 00:21:48,765

to something that,

839

00:21:48,765 --> 00:21:49,308

like you said,

840

00:21:49,308 --> 00:21:50,767

might change in six months?

841

00:21:51,393 --> 00:21:53,645

It’s going to change in six months.

842

00:21:53,979 --> 00:21:55,647

I think you have to teach your people

843

00:21:55,647 --> 00:21:57,024

how to stay current.

844

00:21:57,316 --> 00:21:59,067

Which is different from many other things

845

00:21:59,067 --> 00:22:00,402

you need to teach them.

846

00:22:00,402 --> 00:22:02,446

So, you had to teach people Excel.

847

00:22:02,446 --> 00:22:02,904

It’s true.

848

00:22:02,904 --> 00:22:04,823

Excel changes over time,

849

00:22:04,990 --> 00:22:07,242

but it’s been a fixed skill for a while.

850

00:22:07,242 --> 00:22:08,910

Using a smartphone,

851

00:22:08,910 --> 00:22:09,953

at least up until now,

852

00:22:09,953 --> 00:22:11,455

basically a fixed skill.

853

00:22:11,788 --> 00:22:13,415

We now have to teach people something

854

00:22:13,415 --> 00:22:14,624

that’s not a fixed skill.

855

00:22:15,417 --> 00:22:16,793

What about a college grad?

856

00:22:16,960 --> 00:22:18,003

Or, you know, like my son

857

00:22:18,003 --> 00:22:18,754

just started college,

858

00:22:18,754 --> 00:22:20,255

so they’re going to go—

859

00:22:20,255 --> 00:22:22,132

the ones who are leaving or just starting

860

00:22:22,132 --> 00:22:24,217

are in this very liminal space

861

00:22:24,217 --> 00:22:26,219

where the curriculum has not changed,

862

00:22:26,219 --> 00:22:27,012

but they will

863

00:22:27,012 --> 00:22:28,430

potentially come out into

864

00:22:28,430 --> 00:22:29,264

or are coming out

865

00:22:29,264 --> 00:22:31,266

into a changed environment.

866

00:22:31,266 --> 00:22:33,685

Is it enough that they have,

867

00:22:33,685 --> 00:22:35,771

you know, maximum neuroplasticity?

868

00:22:36,271 --> 00:22:37,064

They have to work

869

00:22:37,064 --> 00:22:38,857

much harder on their own,

870

00:22:38,857 --> 00:22:40,692

and there’s a kind of status adjustment

871

00:22:40,692 --> 00:22:42,652

many people will need to make.

872

00:22:42,652 --> 00:22:44,154

So the previous generation,

873

00:22:44,154 --> 00:22:44,738

there’s a sense

874

00:22:44,738 --> 00:22:45,405

if you’re smart,

875

00:22:45,405 --> 00:22:47,032

your parents have some money,

876

00:22:47,032 --> 00:22:48,742

you went to a good high school,

877

00:22:48,742 --> 00:22:49,868

that there’s a path for you.

878

00:22:49,868 --> 00:22:50,827

You can become a lawyer,

879

00:22:50,827 --> 00:22:51,453

go to finance,

880

00:22:51,453 --> 00:22:53,789

do consulting, work at McKinsey,

881

00:22:53,789 --> 00:22:54,831

and you will be

882

00:22:54,831 --> 00:22:56,291

like upper-upper-middle class

883

00:22:56,291 --> 00:22:57,125

or maybe better

884

00:22:57,125 --> 00:22:58,210

if you’re really talented.

885

00:22:59,169 --> 00:23:00,170

And I think that world

886

00:23:00,170 --> 00:23:01,963

is vanishing rapidly.

887

00:23:01,963 --> 00:23:03,548

The notion that these talented

888

00:23:03,548 --> 00:23:04,925

kids might have to forget

889

00:23:04,925 --> 00:23:06,343

about those paths

890

00:23:06,343 --> 00:23:07,052

and do something

891

00:23:07,052 --> 00:23:09,388

like work in the energy sector,

892

00:23:09,388 --> 00:23:11,640

which might even be for more money

893

00:23:11,640 --> 00:23:12,516

and move somewhere

894

00:23:12,516 --> 00:23:14,059

that’s not just

895

00:23:14,059 --> 00:23:16,269

New York or San Francisco,

896

00:23:16,269 --> 00:23:17,521

maybe, you know, move to Houston

897

00:23:17,521 --> 00:23:18,772

or Dallas

898

00:23:18,772 --> 00:23:21,108

or, you know, an energy center

899

00:23:21,108 --> 00:23:22,651

in Louisiana.

900

00:23:22,651 --> 00:23:24,361

It’s going to be a very different world.

901

00:23:24,361 --> 00:23:25,028

And I don’t think

902

00:23:25,028 --> 00:23:28,031

our expectations are ready for that.

903

00:23:28,240 --> 00:23:31,284

So the people who have invested

904

00:23:31,284 --> 00:23:32,285

in the current system

905

00:23:32,285 --> 00:23:34,121

and ways of succeeding,

906

00:23:34,121 --> 00:23:34,663

I think it will

907

00:23:34,663 --> 00:23:35,956

be very difficult for them.

908

00:23:35,956 --> 00:23:36,623

And a lot of these

909

00:23:36,623 --> 00:23:38,041

upper-upper-middle class

910

00:23:38,041 --> 00:23:39,209

parents and kids

911

00:23:39,209 --> 00:23:41,128

will maybe have a harder time

912

00:23:41,128 --> 00:23:41,711

than, say,

913

00:23:41,711 --> 00:23:43,755

maybe immigrants who came to this country

914

00:23:43,755 --> 00:23:45,006

when they’re eight years old,

915

00:23:45,006 --> 00:23:47,008

were not quite sure what to expect,

916

00:23:47,008 --> 00:23:48,176

and will, you know,

917

00:23:48,176 --> 00:23:49,719

grab at what the best options are,

918

00:23:49,719 --> 00:23:50,429

wherever they are.

919

00:23:51,847 --> 00:23:52,389

A lot of

920

00:23:52,389 --> 00:23:53,682

status adjustment across the board.

921

00:23:53,682 --> 00:23:54,558

It sounds like you’re saying,

922

00:23:54,558 --> 00:23:55,392

you know, from

923

00:23:55,392 --> 00:23:56,518

what your future is

924

00:23:56,685 --> 00:23:57,727

to the work that you do

925

00:23:57,727 --> 00:23:58,687

in an organization

926

00:23:58,687 --> 00:24:00,981

to how and who you manage.

927

00:24:01,356 --> 00:24:02,399

Like in economics,

928

00:24:02,399 --> 00:24:03,733

it used to be if you were very good

929

00:24:03,733 --> 00:24:05,652

at thinking like an economist,

930

00:24:05,652 --> 00:24:06,903

you knew that if you worked hard,

931

00:24:06,903 --> 00:24:07,737

you would do well.

932

00:24:07,946 --> 00:24:08,321

Uh-huh.

933

00:24:08,405 --> 00:24:09,990

I suspect moving forward,

934

00:24:10,323 --> 00:24:11,992

the AI can do that for you,

935

00:24:11,992 --> 00:24:13,368

and you have to be good at managing

936

00:24:13,368 --> 00:24:14,619

and prompting the AI

937

00:24:14,619 --> 00:24:16,413

and setting up research design

938

00:24:16,413 --> 00:24:18,248

in a way the AI can execute.

939

00:24:18,373 --> 00:24:19,791

That’s a very different skill.

940

00:24:19,791 --> 00:24:21,460

It’s not the end of the world,

941

00:24:21,460 --> 00:24:23,420

but when that change happens to you

942

00:24:23,420 --> 00:24:25,255

in the midst of your career plans

943

00:24:25,255 --> 00:24:26,548

it’s very disorienting.

944

00:24:26,798 --> 00:24:27,674

I just want to

945

00:24:27,674 --> 00:24:28,675

put a finer point on

946

00:24:28,675 --> 00:24:30,218

what I hear you saying a lot,

947

00:24:30,218 --> 00:24:30,635

which is

948

00:24:30,635 --> 00:24:32,220

and I think we know this,

949

00:24:32,220 --> 00:24:33,388

that energy,

950

00:24:33,388 --> 00:24:34,806

the energy conversation

951

00:24:34,806 --> 00:24:36,183

is a huge part of the

952

00:24:36,183 --> 00:24:37,559

AI future in a way that

953

00:24:37,559 --> 00:24:39,102

maybe we just have not been quite

954

00:24:39,352 --> 00:24:41,146

explicit enough about.

955

00:24:41,563 --> 00:24:43,064

I think we’ve not been very good

956

00:24:43,064 --> 00:24:43,940

at understanding

957

00:24:43,940 --> 00:24:45,817

it will need to expand a lot.

958

00:24:45,817 --> 00:24:47,694

We’ve made it very hard to build

959

00:24:47,694 --> 00:24:49,946

new energy infrastructure.

960

00:24:49,946 --> 00:24:50,780

I think that will again

961

00:24:50,780 --> 00:24:51,740

change over time,

962

00:24:51,740 --> 00:24:52,824

but probably a slow

963

00:24:52,824 --> 00:24:54,910

and somewhat painful process.

964

00:24:55,202 --> 00:24:56,119

But it’s also a place

965

00:24:56,119 --> 00:24:58,079

where a lot of new jobs will be created.

966

00:24:58,079 --> 00:24:58,663

A lot.

967

00:24:59,164 --> 00:25:00,790

Also biomedical testing.

968

00:25:00,790 --> 00:25:01,708

There’ll be all these new

969

00:25:01,708 --> 00:25:03,418

AI-generated ideas.

970

00:25:03,418 --> 00:25:05,128

Of course, most of them won’t work.

971

00:25:05,128 --> 00:25:06,922

Someone has to test them.

972

00:25:06,922 --> 00:25:08,924

Not just people working in research labs,

973

00:25:08,924 --> 00:25:10,258

but just actual people

974

00:25:10,258 --> 00:25:11,551

in the clinical trials.

975

00:25:11,551 --> 00:25:13,428

So that will be a much larger sector.

976

00:25:13,428 --> 00:25:14,638

That’s what will help us live

977

00:25:14,638 --> 00:25:16,556

to be 96 or 97.

978

00:25:16,556 --> 00:25:16,973

But again,

979

00:25:16,973 --> 00:25:18,099

you have to do the hard work

980

00:25:18,099 --> 00:25:19,017

in the meantime.

981

00:25:19,017 --> 00:25:20,810

And that will stretch on for decades.

982

00:25:20,810 --> 00:25:21,728

So people often

983

00:25:21,728 --> 00:25:23,813

ask me where the new jobs are coming from,

984

00:25:23,813 --> 00:25:25,315

and in part, I just don’t know.

985

00:25:25,315 --> 00:25:26,733

But those are two areas where, to me,

986

00:25:26,733 --> 00:25:27,901

it seems obvious

987

00:25:27,901 --> 00:25:29,277

there’ll be a lot of new jobs

988

00:25:29,277 --> 00:25:30,570

and they’ll be super productive.

989

00:25:30,904 --> 00:25:31,321

Right.

990

00:25:31,404 --> 00:25:32,155

And then

991

00:25:32,155 --> 00:25:32,614

I don’t want to

992

00:25:32,614 --> 00:25:33,490

put you on the spot further.

993

00:25:33,490 --> 00:25:34,866

You’re saying we’re early enough

994

00:25:34,866 --> 00:25:37,619