Episode Transcript

The value of code has plummeted because you can now generate code instantaneously for a fraction of a cent, but that doesn't mean the value of software has plummeted.

As software becomes more and more accessible to people, the value of software is going to increase because people are going to make more software to solve even more specialized problems.

That's a real opportunity: creating a tool that somebody like my brother can use to actually solve their own problems.

What does it take to do something useful when you have to build some software?

The way that I've been doing that, and I think it works extremely well, is by having the model build its own tooling.

If you can avoid using agents, you should.

Tools that build tools are the ultimate unlock for productivity, but how do you build them?

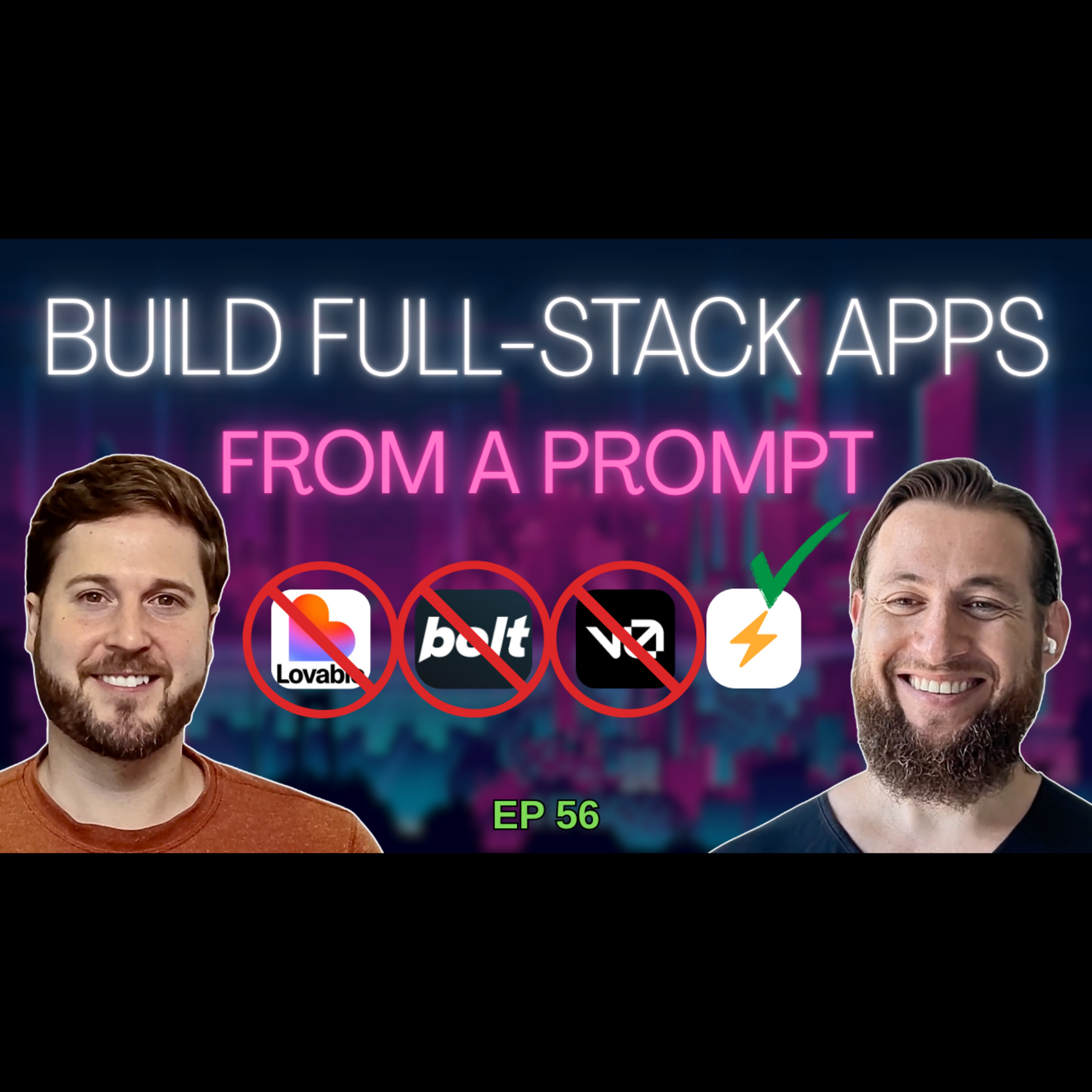

Today we're joined by Richard Abrich, an AI consultant who was previously on Tool Use back in episode 10.

He's recently built Fastible.

It's a tool that allows you to build full stack apps from a prompt in your browser.

So in episode 56 of Tool Use, brought to you by ToolHive, we're going to learn not only about Fastible but also how Richard built it, his development workflows, his advice on context engineering, and his approach to hallucination mitigation.

I always have a great time talking to Richard.

He's a super smart guy and he built this tool for himself.

So it solves real problems.

And you'll be surprised how his approach seems to make way more sense than what the big names in the space do.

So please enjoy this conversation with Richard Abrich.

So I've been building Fastible.

So I wrapped up a contract with Microsoft three months ago, and on that contract we built a full stack, agentic web application that would analyze documentation and find errors.

What really struck me about that experience was my productivity, because that was my first time using vibe coding tools.

So I used Windsurf, and I was just blown away at how fast I was building. And not just me: the engineers on the team were also asking, how are you doing this so quickly?

What's happening?

And so that's when I started thinking, these are some pretty good engineers I'm working with.

They know a thing or two.

One of them won second place at the World AI Fair hackathon in New York City.

And he was telling me that my productivity was like amazing.

And I was like, you know, it wasn't anything that I was doing in particular.

I think it was the process. It was vibe coding, but not just vibe coding: it was the process of integrating logs. So I would prompt Windsurf to build some feature, and it would do its thing.

Well, it wouldn't just be "build this feature."

There's more to it than that.

But after it was finished, it would say it's done.

And then I would test it and it was broken.

And so I quickly found that the easiest way to get it to fix itself was just to copy and paste the relevant logs, either from the browser console if it was front end or from the Docker container if it was back end, back into the context.

And I was just amazed at how much time I was spending doing that.

It was such brain-dead work.

I was just copying and pasting logs. I had to know where to look for them, but that's all it was.

And so it occurred to me that, you know, this is obviously not something that a human should be doing.

This is something we can automate.

And then I learned about, you know, Lovable.

Lovable is the fastest company in history to reach $100 million ARR, right?

And I was looking into common complaints on Reddit forums from users about their experience with Lovable.

And it was basically exactly what I described, that you would give it some instructions and it would go away and sort of execute on those instructions and come back and tell you that it's done, but it wasn't done.

And then you'd have to sort of do it over and over and over again.

And non-technical users especially get really, really frustrated.

And so it just became clear to me that like you can solve this problem fairly easily by just closing the loop and piping the logs directly into the agent.
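The "close the loop" idea can be sketched in a few lines: pull recent container logs, keep only the lines that look like failures, and append them to the agent's next prompt. This is a minimal illustration, not Fastible's implementation; the error keywords, the `tail` size, and the prompt wording are assumptions.

```python
import re
import subprocess

# Assumed markers of a failure worth showing the agent; tune for your own stack.
ERROR_PATTERN = re.compile(r"error|exception|traceback|failed", re.IGNORECASE)


def fetch_container_logs(container: str, tail: int = 200) -> str:
    """Return the last `tail` lines a Docker container wrote to stdout and stderr."""
    result = subprocess.run(
        ["docker", "logs", "--tail", str(tail), container],
        capture_output=True,
        text=True,
        check=False,
    )
    return result.stdout + result.stderr


def relevant_lines(log_text: str, context: int = 2) -> list[str]:
    """Keep each matching line plus up to `context` lines after it (e.g. stack frames)."""
    lines = log_text.splitlines()
    keep: set[int] = set()
    for i, line in enumerate(lines):
        if ERROR_PATTERN.search(line):
            keep.update(range(i, min(i + context + 1, len(lines))))
    return [lines[i] for i in sorted(keep)]


def build_fix_prompt(task: str, log_text: str) -> str:
    """Append the relevant log excerpt to the original request, if there is one."""
    excerpt = relevant_lines(log_text)
    if not excerpt:
        return task
    return (
        f"{task}\n\nThe app is still failing. Relevant log lines:\n"
        + "\n".join(excerpt)
        + "\n\nFix the root cause, then check the logs again."
    )
```

Usage might look like `build_fix_prompt("Add a login page", fetch_container_logs("backend"))`, where `"backend"` is a hypothetical container name. Filtering matters because dumping every log line into the context wastes tokens and buries the actual error.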

And so that was really the motivation for building Fastible.

So that's what I've been building for the last three months.

Nice, very cool.

Yeah.

And I gave it a test spin, and it's a really cool setup.

Could you talk a little bit about the differences, beyond just the log loop, that come from it being a full stack app rather than just a nice GUI that people can put together?

One of the other limitations, I guess, with Lovable and its clones is that they're basically all built on pure JavaScript with Supabase as the database.

And I guess that leaves a lot of opportunity.

I think not everybody wants to use Supabase.

It can be pretty expensive when you start scaling, and there are other tools; you don't need to use Supabase, right? "Postgres for everything" is my motto: it's open source and it does everything.

And so that's probably one of the most significant differentiators: the tech stack.

We're using FastAPI, which is a Python framework, and we're actually using the official Full Stack FastAPI Template, which uses React on the front end.

That's not too dissimilar from Lovable on the front end, but on the back end we're using FastAPI, for the database we're using Postgres, and they're all deployed via Docker.

So I think one of the big differentiators is that it uses a more vanilla tech stack than Lovable and co.

So anyone who is more familiar and comfortable with that tech stack, and wants the advantage of not being locked into Supabase, that's really who Fastible is for.

That's the differentiator.

Nice.

And one thing I also saw is that email authentication comes right out of the box. Anytime you get into the realm of security or auth with non-technical or inexperienced people vibe coding their own project, it's just something they don't consider.

So by including it, you really let people skip the risky part and say, I can make sure only a subset of people can access this, and that they're authenticated ahead of time.

Are there any other limitations you see in using Fastible versus just opening up Cursor or Windsurf and going nuts on a project?

So I think the main limitation, though I don't really see it as a limitation so much as a deliberate design choice, is that it's a significant constraint.

When you're using Cursor, you can write anything, right?

You can write Rust, you can write C++.

Your mileage may vary, because the training data for the models might not be there.

So they might not do as well in those languages.

But the idea with Cursor and these general purpose tools is that they're general purpose.

So you can build anything you want, whereas Fastible is really focused on building full stack web applications. Now, it doesn't have to be full stack: you can just focus on the back end and deploy only the back end if you want.

But it is constrained to FastAPI, Postgres, and Docker.

So if you don't want to use those things, then you should probably not use Fastible. In the future we might extend that, but for now, that's the limitation.

And it's a deliberate design choice for two reasons.

One, it's based on the Full Stack FastAPI Template, which is a really, really popular project that I've worked with a lot over the years, and lots of other people work with it too.

And it's a pretty good project to start building applications from.

And second, it uses the languages that large language models really excel at, which are TypeScript and Python.

So if you want to build in Go or Rust, you might have more trouble using large language models, because there isn't the same amount of training data in those languages.

No, absolutely. One thing in terms of usage:

When you were on episode 10, you mentioned garbage in, garbage out and the importance of context engineering.

As soon as you start opening up these tools to people who aren't as experienced with prompting, there's the risk that when they interface with the chat, they put garbage in because they don't really know what they're asking for.

They may be too abstract.

How does Fastible help prevent or mitigate that, or assist users so they can be as natural as they want without having to know the correct terminology?

Yeah, that's a great question.

Right now it does not.

It's all right.

It's still a work in progress.

So the goal is to make it available to non-technical users.

So I'll back up, actually.

Part of the reason I started building it was, again, because of my brother.

My brother started vibe coding his own app.

My brother's an electrophysiologist, and he had some new requirements around billing.

He had to enter in billing codes.

And so he started vibe coding his own web app, which was then accepted into a conference.

I was helping him along the way if he had any questions.

But it just occurred to me that there's a real opportunity in creating a tool that somebody like my brother can use to actually solve their own problems.

And so that's the motivation: I want to make it so that non-technical users can use it.

But we're not there yet. Maybe next time.

The way I'm thinking about solving that, and it's something other people have already started building, is essentially a design assistant.

So before going off and actually converting the prompt into code, the first thing you should do, and what I do when I'm vibe coding, is convert my prompt into a better prompt.

That means using a large language model to assist me in building the prompt.
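As a rough illustration of "converting my prompt into a better prompt", here is a hypothetical meta-prompt builder. The template wording and the default section list are assumptions made for this sketch; they are not taken from Fastible or from any particular design-assistant tool.

```python
from typing import Optional

# Hypothetical meta-prompt; the wording and sections are assumptions, not Fastible's prompt.
DESIGN_TEMPLATE = """You are a software design assistant.
Before any code is written, turn the rough request below into a design document.
Ask clarifying questions about anything ambiguous instead of guessing.

Rough request:
{request}

Produce these sections:
{sections}
"""

DEFAULT_SECTIONS = [
    "Goal and users",
    "Core features (must-have vs. nice-to-have)",
    "Data model",
    "API endpoints",
    "Open questions",
]


def build_design_prompt(request: str, sections: Optional[list[str]] = None) -> str:
    """Wrap a rough idea in a meta-prompt that asks for a design doc before any code."""
    chosen = DEFAULT_SECTIONS if sections is None else sections
    numbered = "\n".join(f"{i}. {name}" for i, name in enumerate(chosen, start=1))
    return DESIGN_TEMPLATE.format(request=request.strip(), sections=numbered)
```

For example, `build_design_prompt("Track the billing codes I enter after each procedure")` yields a prompt you could send to any chat model. Its reply becomes a first draft of the design document, which you then iterate on before writing code.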

And that's actually how I built Fastible as well.

Before I started coding anything, I spent over a week just iterating on a design document, and the document got way too long.

I eventually had to pare it down.

But it really helped in basically setting the context for the model.

That's what I used basically every time I wanted to build something.

You're not going to do the whole thing in a single context.

You have to create a new context every time.

And so you need some sort of shared context that you move between these different conversations.

So I basically just manually created a Google Doc by prompting a large language model.

The goal is to build that into Fastible, so that before you start coding, the agent helps you create the design.
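The shared-context idea can be made concrete: every fresh conversation starts with the same design document, followed by the task for that session. This sketch assumes the design doc is saved as a local Markdown file and uses a generic role/content chat-message format; the file name, the size limit, and the message layout are assumptions, not a specific provider's API.

```python
from pathlib import Path


def start_conversation(design_doc: Path, task: str, max_chars: int = 20_000) -> list[dict]:
    """Seed a fresh chat with the shared design doc, then the task for this session."""
    doc = design_doc.read_text(encoding="utf-8")
    if len(doc) > max_chars:
        # An oversized doc crowds out the task itself; the fix is to pare it down by hand.
        raise ValueError(f"design doc is {len(doc)} chars; pare it down below {max_chars}")
    return [
        {"role": "system", "content": "Project design document:\n\n" + doc},
        {"role": "user", "content": task},
    ]
```

The size check reflects the experience described above: a design doc that keeps growing eventually stops helping, so failing loudly is a cheap reminder to trim it.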

And ultimately the goal is that we don't even have to be aware of what's happening in the background.

I don't know if that's going to be possible.

And maybe I shouldn't want that to be possible, because then we're all out of a job.

But that's the goal.

Excellent.

Yeah.

And in my day job at Box 1 doing the AI Academy, I've been trying to teach everyone how to build their own tools using Cursor and Claude Code.

And one of the issues we've run into is that non-technical people, with the infinite possibilities these tools open up, can steer them off the rails.

They can do unintended things with unintended side effects.

So being able to put these guardrails on and say, here's a well-structured web app that you can deploy and automatically sync to your GitHub, I feel like that's really going to alleviate a lot of the issues that come from giving people the Wild West of AI tooling.

Do you envision Fastible mostly being in the zero-to-one stage of the development life cycle?

Or is it something that if I have an existing project, whether I built it on Fastible or otherwise, I can iterate, refactor and try to build upon it?

Yeah.

Thank you for asking that question.

So one of the features I just deployed is a GitHub project import feature.

And again, this is for my brother, who vibe coded his app but wants to use Fastible.

So now if you already have a project, whether it's vibe coded or not, you can just specify the GitHub URL, and Fastible will clone the repository and convert it into the Fastible stack.

Even if it's in a completely different language, you can convert it into the same stack.

As for zero to one, it depends how you define one.

Lovable defines one as an MVP, right?

It's not even that; it's more like a mock-up, with basically a UI and maybe some basic back end functionality.

But most people are finding that they have to move off of Lovable to actually bring it to production.

So the goal with Fastible was to remove that limitation, so that users can stay in the platform, build the full application there, and actually deploy it.

We don't have deployment yet; we have the live staging environment, which you can share with your friends.

But that's on our hosting environment.

Eventually we're going to build it so that it actually helps you deploy into your own hosting environment as well.

But that's still a to-do.

But yeah, the goal is to make it so that you can build the full application from start to finish in Fastible.

Very cool.

I did see that it's bring your own API key.

So it's nice that I don't have to worry about paying a monthly SaaS subscription right now.

I can just kind of control my usage.

Do you have a general estimate?

I know with projects there's such a wide range, but how much will it cost someone to build something from a basic to-do app to something a little more complex?

So are we looking at dollars, tens, hundreds, thousands?

How much can they expect to spend on API costs?

Yeah, I'd say for a to-do app maybe around $10, depending on how complex your to-do app is. Just adding relatively simple stuff is like $0.10.

Adding an animated background is like $0.10.

And so you might have to do that a few times to make a to-do app.

So you can get to somewhere between $1 and $10 for a basic app.
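The estimate above is simple arithmetic: number of changes, times how many attempts each takes, times the average cost per attempt. The sketch below uses the roughly $0.10 per simple change quoted in the conversation; the attempt counts are assumptions you would replace with your own experience.

```python
def estimate_cost(changes: int, attempts_per_change: float = 1.0, cost_per_attempt: float = 0.10) -> float:
    """Back-of-the-envelope API spend in USD: changes x attempts x average cost per attempt."""
    return round(changes * attempts_per_change * cost_per_attempt, 2)


print(estimate_cost(10))                          # 1.0
print(estimate_cost(25, attempts_per_change=4))   # 10.0
```

Ten clean one-shot changes land at the low end of the quoted range; 25 changes that each need about four tries land at the high end.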

Yeah.

It really depends what you're building though.

Nice. And you did mention the pre-prompt, the prompt optimization, using ChatGPT.

Are there any other tools that you think people are either using or should explore that would help augment and supplement using Fastible?

Well, like I said, I want Fastible to be the platform where you can build. I'm building this for myself, really.

I've built a lot of full stack applications as part of my job.

And so I'm really building it for myself to make me go faster.

That's why one of the things I want to build is this design iteration tool, so I don't have to do it with Google Docs and ChatGPT.

But until that's there, you can always go to ChatGPT or any other model.

That's why there's a copy button in the agent's chat window.

So you can easily copy the full agent context and paste it into another model to get a second opinion.

There are some tools I came across in the last few days on Twitter.

I haven't actually had a chance to use them yet, though.

But there's at least one tool that's basically what I'm describing.

It's basically a design assistant: you type in, oh, I want to build this thing, and it leads you through the engineering design.

At the end you get a design document.

I'll see if I can dig up the name and send it to you afterwards.

Perfect.

Yeah, we'll include it down below.

Another big difference between Fastible and Lovable is that Fastible doesn't try to hide the complexity from you.

Lovable, I think, is really intended for non-technical users.

And as a technical user, I found that to be a limitation, and so did other folks I spoke to about it.

So with Fastible, the goal is that you shouldn't ever have to look at code if you don't want to, but you can if you do.

So there's a full editor in there, plus a file browser.

You can view the diffs, and you can accept or reject diffs file by file if you want.
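Per-file accept/reject can be modelled with the standard library alone: compute a unified diff for each file the agent touched, then keep the agent's version only for the files you accept. This is an in-memory sketch of the idea; Fastible's actual mechanism is not described here and may well use Git directly.

```python
import difflib


def file_diffs(before: dict[str, str], after: dict[str, str]) -> dict[str, str]:
    """Unified diff for every file the agent changed, keyed by path."""
    diffs = {}
    for path in sorted(set(before) | set(after)):
        old = before.get(path, "").splitlines(keepends=True)
        new = after.get(path, "").splitlines(keepends=True)
        if old != new:
            diffs[path] = "".join(
                difflib.unified_diff(old, new, fromfile=f"a/{path}", tofile=f"b/{path}")
            )
    return diffs


def apply_review(before: dict[str, str], after: dict[str, str], accepted: set[str]) -> dict[str, str]:
    """Keep the agent's version of accepted files and the original version of the rest."""
    result = {}
    for path in set(before) | set(after):
        source = after if path in accepted else before
        if path in source:  # a rejected new file simply disappears
            result[path] = source[path]
    return result
```

Keeping the decision per file means a single bad edit can be rejected without throwing away the rest of the agent's work.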

You don't have to.

You can just go cowboy style and not look at anything.

But if you want to, it's there.

And I think that's useful for technical people who might have an opinion about how something should be implemented.

Or, more importantly, the reason I built it was that sometimes the model will screw up something very, very minor, and you don't want to spend, say, $0.10 or wait a minute for it to fix it.

You just want to press backspace and fix it yourself.

So that's what that's there for.

I think that's the other key differentiator.

Nice.

And one thing I do like about the deploy-to-GitHub aspect is that on a lot of my projects, I've actually installed either Mentat or Claude Code in a GitHub Action.

So if a PR is pushed up, it'll review it and give some feedback.

I can kind of guide it a little that way.

So I'm actually curious: what model or models are powering Fastible?

Yeah, right now it's just Claude Code.

Nice.

And the reason is because when I started building it, that was the one with the best API.

I looked at a few other ones. OpenHands was the first, because I wanted to make it open source initially, but I had some trouble integrating OpenHands, and there were a few others.

But then I looked at the Claude Code API, and it was clearly the most advanced and best documented.

So that's the one I went with.

Since then, OpenAI Codex has been released, and I think Gemini has something as well, but I haven't looked at the APIs for those yet.

But the goal is to integrate those as well.

So you can pick and choose which model provider you want.
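Supporting several agent providers usually comes down to a small registry that maps a provider name to an adapter with a common signature. In this sketch the adapter signature `(prompt, workdir) -> str` and the `echo` backend are hypothetical; real adapters would wrap each vendor's own SDK or CLI, whose calls are deliberately not shown here.

```python
from typing import Callable, Dict

# Hypothetical adapter signature: (prompt, working directory) -> agent's final message.
AgentBackend = Callable[[str, str], str]
BACKENDS: Dict[str, AgentBackend] = {}


def register(name: str) -> Callable[[AgentBackend], AgentBackend]:
    """Decorator that makes a backend selectable by name (e.g. from a dropdown)."""
    def wrap(fn: AgentBackend) -> AgentBackend:
        BACKENDS[name] = fn
        return fn
    return wrap


def run_agent(provider: str, prompt: str, workdir: str) -> str:
    """Route a request to whichever provider the user picked."""
    try:
        backend = BACKENDS[provider]
    except KeyError:
        raise ValueError(f"unknown provider {provider!r}; choose from {sorted(BACKENDS)}") from None
    return backend(prompt, workdir)


@register("echo")
def echo_backend(prompt: str, workdir: str) -> str:
    """Stand-in backend for testing the plumbing without any API key."""
    return f"[{workdir}] {prompt}"
```

With a bring-your-own-key setup, each registered adapter would read its own key, so switching providers is a configuration change rather than a code change.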

Very cool.

We actually had Graham Neubig from OpenHands on here back in the day.

So we'll have to send him a message to step it up.

Yeah, yeah, it's really cool.

Actually, just this past week I made the switch to Codex to give it a try, because I heard a lot of good things.

One thing I dislike about it is that if you ask it to make corrections or explain the justification for the code, it proposes a rewrite, and you have to accept or decline it before it'll give you the explanation.

What I really like about Claude Code is that it does conversation first, code second.

But OpenAI seems to flip that. It's just a small user-experience thing.

But when I upgraded, I think I was on 0.9, and it went up to 0.28.

So they're shipping fast and making big improvements.

So I definitely hope more people try it out. You've got to explore these different tools and see what works for you.

Yeah, exactly.

I think another big differentiator among the models is that Claude Code is probably the most expensive one out there.

We've already had some comments from users like, oh, this is adding up.

So that's one of the reasons I want to switch, or at least add the option to switch.

Yeah, having a toggle or a dropdown list tends to be helpful, but when you're looking for certain results,

it's really tough to beat the state of the art.

We just get spoiled by it.

We get used to saying, oh, Opus 4.1 can output this, this is what I want.

Turn on Max mode in Cursor and deal with the cost.

Yeah, especially because the most expensive thing is really your time.

It saves time, and if you compare it to the salary of another engineer or outsourcing to a consultant, it's a massive reduction, as long as you're able to work through it.

Yeah. When you log in, you'll see the screen where you can create a project.

So you can create a new project here.

You can just describe whatever you want it to be, like my social media app or something like that.

And if you have an existing project, here's where you can paste a Git repo URL and import it.

All right, so once you've created an application, this is sort of the screen that you'll see.

This will take a couple of minutes to load up.

One of the things on my very long to-do list is to speed this up.

But while it's going, let me quickly describe what's happening.

Basically, there are a bunch of containers that need to be spun up in the background, like the back end container and the database.

And as they spin up, you'll start to see them here.

So here's where you see the Postgres database, and you have the full logs here.

Here's where you get browser logs, which is pretty powerful.

And it took a little bit of hacking to make this work.

But basically, what you're seeing here, once it's loaded anyway, is an iframe.

It's loading from a separate website, your website: it creates a new website just for this project.

And that website is going to be loaded here.

And the logs for that website are what you're seeing here.
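On the receiving side, logs from several sources (browser console, back end, database) can be kept in bounded per-source buffers so the agent always gets a recent, labelled snapshot rather than an ever-growing dump. How the browser lines are captured inside the iframe is a JavaScript concern not shown here; the source names and the line limit below are assumptions.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class LogBuffer:
    """Keep the most recent lines per source so the agent sees a bounded snapshot."""
    max_lines: int = 100
    sources: dict[str, deque] = field(default_factory=dict)

    def add(self, source: str, line: str) -> None:
        # deque(maxlen=...) silently drops the oldest line once the buffer is full.
        buf = self.sources.setdefault(source, deque(maxlen=self.max_lines))
        buf.append(line)

    def snapshot(self) -> str:
        """Render every source as a labelled section, ready to paste into a prompt."""
        parts = []
        for source in sorted(self.sources):
            parts.append(f"--- {source} ---")
            parts.extend(self.sources[source])
        return "\n".join(parts)
```

Labelling each section tells the model whether an error came from the front end or the back end, which is exactly the "knowing where to look" step that was otherwise done by hand.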

So in the browser there's nothing happening yet, because it hasn't booted up.

But you can see the front end is installing dependencies.

The back end as well.

It's faster after the first time, because it doesn't have to install everything from scratch.

While it's going, I can also show you this stuff here.

So these are the power user features.

If you really care about code, you can come look at it here.

I'll open up the login route so we have something to look at.

And you can see there's nice syntax highlighting here, right?

So this is now your website, and you can see it already has this login form.

And these credentials here are generated automatically for you.

If you want to log in, you can log in.

And here's your application.

You can see there's not much here yet.

This is just the standard, default Full Stack FastAPI Template.

And you can also see these access URLs.

So if I go here, for example, you'll see that it's actually loading it up.

This is the website you see here, and it's being hosted on this domain name.

And yeah.

So let me log out here and go back to that login route.

Let me give a demo of how this works.

I can just edit, and it's live reloading.

So I'll just type hello, and you can see it immediately shows up there.

And so you have your hello up here, and then you have your syntax highlighting, and you also see this diff here.

If I open this, you can see that it shows me the diff, and I can also just go back and edit it.

All right, so that's the power user feature.

But if you want, you can just hide that altogether and not pay attention to it.

And you can just say, add a giant emoji to the login page, and this will just take a second.

But in the background, it's starting up a Claude Code context, and Claude Code is running inside the container that's running the application and actually modifying the live code.

There you go, it added a giant emoji.

And if I open up this guy here, you'll see that it should be...

There you go.

Here's the diff of what it added.

And so now, if I want to, I can just stage it or I can commit it.

So if I commit it, you'll see it'll generate a commit message for me.

"Add rocket emoji and hello text to login page."

And I can commit that.

And there you go, it's committed.

So there are no staged changes left.

And now if I want, I can go to my project and I can say, push to GitHub.

That's new.

My demo.

Yeah, exactly.

Yeah, but normally this works, and you can export your project to GitHub and do what you will with it.

And I didn't really show the value of the logs here.

But one thing I want to demo real quick: let's say in the login page, I add console.log("hello world").

And you can see it's grabbing the logs there.

And so this is useful because if something were broken, the console would throw an error.

And then I can just copy that and paste it back into the agent.

And so this is basically closing the loop for the agent, to ground it in the actual environment.

And then, as I mentioned, you have this copy feature here.

You can copy just the top-level messages, or you can also expand them.

And there are many more details in here.

You can see each message and also get the cost. I think most of the other tools hide the cost, probably to encourage usage, which I find suboptimal.

I'd like to know how much I'm spending.

So there you go.

It cost $0.15 to add this emoji using Claude Code.
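Showing a per-message cost like this comes down to multiplying token counts by per-token prices. The sketch below takes prices as parameters rather than hard-coding any provider's rates (check your provider's current pricing), and it ignores prompt-caching discounts, so treat it as a simplification. Some agent SDKs report a cost figure directly, in which case you would display that instead.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    input_tokens: int
    output_tokens: int


def message_cost(usage: Usage, input_usd_per_mtok: float, output_usd_per_mtok: float) -> float:
    """USD cost of one agent turn, given per-million-token prices for your model."""
    return (
        usage.input_tokens * input_usd_per_mtok + usage.output_tokens * output_usd_per_mtok
    ) / 1_000_000


def session_cost(usages: list[Usage], input_usd_per_mtok: float, output_usd_per_mtok: float) -> float:
    """Total spend across a conversation, e.g. to display next to the chat."""
    return sum(message_cost(u, input_usd_per_mtok, output_usd_per_mtok) for u in usages)
```

Because agent turns resend a growing context, input tokens usually dominate, which is one reason starting a fresh chat for an unrelated fix (as in the next part of the demo) also keeps costs down.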

Yeah, the transparency is always helpful just to get a better understanding of what you're actually doing.

And yeah, I agree.

Yeah.

And then you can also see, you know, let's give an example of like an error.

If I just type foo here, we should get an error.

There you go.

foo is not defined.

So I can just copy this and create a new chat, because let's say this chat isn't relevant.

So I'll use a fresh context, paste it in, and hopefully it'll pick up the error and try to fix it.

There you go.

You can see it found the ReferenceError: the issue is my undefined variable foo.

And you can see it removed foo.

But I still haven't given it the tool to restart the environment.

So I think if I refresh it, will that fix it?

Yeah, there you go.

I still have to give it that tool to do the refresh.

That's not implemented yet.
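A missing "restart" tool like the one mentioned here could be exposed to an agent as a small, constrained tool definition plus a dispatcher. The tool name, the allowed service names, and the use of `docker restart` are all assumptions for this sketch. The dictionary is shaped like a JSON-Schema tool definition, the general form many agent APIs accept, but check your provider's docs for the exact field names.

```python
import subprocess

# Hypothetical tool definition; name, schema, and container names are assumptions.
RESTART_TOOL = {
    "name": "restart_service",
    "description": "Restart one of the project's containers after code changes.",
    "input_schema": {
        "type": "object",
        "properties": {
            "service": {"type": "string", "enum": ["frontend", "backend", "db"]},
        },
        "required": ["service"],
    },
}


def handle_tool_call(name: str, args: dict, runner=subprocess.run) -> str:
    """Dispatch a tool call from the agent to `docker restart`, refusing anything else."""
    if name != RESTART_TOOL["name"]:
        return f"unknown tool: {name}"
    service = args.get("service")
    allowed = RESTART_TOOL["input_schema"]["properties"]["service"]["enum"]
    if service not in allowed:
        return f"invalid service: {service!r}"
    result = runner(["docker", "restart", service], capture_output=True, text=True)
    return f"restarted {service}" if result.returncode == 0 else result.stderr
```

Restricting the service to a fixed list keeps the agent from restarting arbitrary containers; the `runner` parameter exists only so the dispatcher can be tested without Docker.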

But there we go.

There's a quick demonstration of Fastible.

Awesome.

Thank you so much for showing us.

My pleasure.

One thing you mentioned was the idea of making a big project design doc to guide things and get started.

Do you use any other Markdown files or project docs throughout your project, for example a scratch pad or to-do list?

Do you have any other assets that help guide the LLM across multiple conversations?

Yeah, that's actually something I didn't mention as one of the differentiators.

While building this, I built it on the same stack.

It uses the Full Stack FastAPI Template to deploy user applications, but it's actually also built on the Full Stack FastAPI Template.

And that's a template that I've been using for years now.

And so I'm pretty comfortable with it, and I'm also pretty comfortable prompting models to work with it.

And as I've been going, I've been creating this big long system prompt, which actually still hasn't been integrated but will be very shortly, to help the model work with the existing template.

It has best practices; for example, after modifying the back end, the model should run the OpenAPI client generation script to automatically generate the front-end client, rather than trying to implement that code itself.

So that's one piece of text

that I think is going to make a big difference.
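A system-prompt rule like "regenerate the client after backend changes" can also be enforced mechanically: check which files the agent touched and, if any could change the OpenAPI schema, remind it on the next turn. The paths below are assumptions loosely modelled on the Full Stack FastAPI Template layout, and the reminder deliberately does not name a specific script; adjust both to your repository.

```python
# Assumed paths, loosely modelled on the Full Stack FastAPI Template layout; adjust to your repo.
SCHEMA_PATHS = ("backend/app/api/", "backend/app/models.py")
REGEN_REMINDER = (
    "The backend API changed. Regenerate the frontend client from the OpenAPI schema "
    "(using the template's client-generation script) instead of hand-writing it."
)


def needs_client_regen(changed_paths: list[str]) -> bool:
    """True if any changed file could alter the OpenAPI schema the frontend client is built from."""
    return any(path.startswith(SCHEMA_PATHS) for path in changed_paths)


def post_edit_reminders(changed_paths: list[str]) -> list[str]:
    """System-prompt rules worth repeating to the agent after this particular edit."""
    return [REGEN_REMINDER] if needs_client_regen(changed_paths) else []
```

Repeating a rule at the moment it applies tends to work better than relying on the model to recall it from a long system prompt.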

And then for myself, I also just have a to-do file that I add to. That's something I look at myself, not something I use with the models.

It's just for me to keep track in a really quick way.

I like using GitHub, or something more professional, if I'm working with a team, but on this one I'm just hacking on my own so far, so I want minimum overhead: text files.

So I just have a to-do text file, and then of course there's a README.

I think that's really important, both for users and for the model.

And so as I'm going, especially when it comes to stuff like deployments, you want that to be rock solid.

And so I always put it into a README.

So it's very, very clear what the right way to do this is.

Yeah, I've gotten into the habit... I'm back on team monorepo. I used to do a lot more microservices and try to separate concerns, but now having everything in the same repository just makes it that much easier for AI to make the connections.

And I write a README for each individual app as well as one for the entire project, with more of the deployment best practices, and I always try to frame it for the LLM as: pretend a new developer is coming on tomorrow.

Do they have all the information they need?

And then I just let it update the README each time.

With the caveat that I want to prune outdated information, because it's so easy to keep appending and appending, and all of a sudden you get a jumbled file that doesn't really guide anything because it goes in so many directions.

So it is an iterative process.

But I like the idea of a manual to-do list.

That's your document that you know is accurate.

Yeah, that actually reminds me that I do have additional READMEs throughout the project. For more complex features, I'll have the model generate a Markdown file that describes how the feature works, so that if the model needs to understand more about it in the future, it can just read that file.

You don't want to smash all of that into the README, because it gets too long, like you said.

So it's all about context engineering.
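One way to let the model find those per-feature Markdown files without loading all of them is to give it a short index: each file's path and first heading. It can then decide which file to read. This is a hypothetical helper, not something described in the conversation; the excluded directories are assumptions.

```python
from pathlib import Path


def doc_index(repo: Path, exclude: tuple[str, ...] = ("node_modules", ".git")) -> str:
    """List every Markdown file with its first heading, so the model can pick what to read."""
    entries = []
    for md in sorted(repo.rglob("*.md")):
        rel = md.relative_to(repo)
        if any(part in exclude for part in rel.parts):
            continue
        lines = md.read_text(encoding="utf-8").splitlines()
        # First "# ..." line, with the leading hashes and spaces stripped.
        title = next((line.lstrip("# ").strip() for line in lines if line.startswith("#")), "(untitled)")
        entries.append(f"- {rel.as_posix()}: {title}")
    return "\n".join(entries)
```

The index costs a few lines of context instead of every document's full text, which is the same trade-off as keeping the top-level README short.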

Yep, it always comes down to that.

One thing you mentioned is that you used the Full Stack FastAPI Template to build Fastible.

Is Fastible capable of building another Fastible?

Could it be a self-replicating thing?

Yeah, it's a great question. I have to think about that one, actually.

So I think ultimately the goal is for that to be the case.

But I think right now there are probably some limitations around starting and restarting environments.

For example, sometimes Docker will run out of memory or something like that, and you have to just kill the daemon and restart it.

As I was hacking on this, I was creating new projects because I was testing it, and I hadn't implemented the project cleanup logic, right?

And so eventually there was no more room left on disk, and I had to purge my Docker system resources.

So that's something that could theoretically be built into Fastible so that it's aware of that.

But you know, I haven't done that yet.
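The missing "project cleanup logic" could start as simply as finding projects idle past a threshold and tearing down their containers and volumes. This sketch assumes each project runs as its own Docker Compose project named after it, and that last-activity timestamps are tracked somewhere; both are assumptions, not details from Fastible.

```python
from datetime import datetime, timedelta


def stale_projects(last_active: dict[str, datetime], now: datetime, max_idle: timedelta) -> list[str]:
    """Projects idle longer than max_idle, oldest first."""
    stale = [(ts, name) for name, ts in last_active.items() if now - ts > max_idle]
    return [name for _, name in sorted(stale)]


def cleanup_commands(project: str) -> list[list[str]]:
    """Commands to tear down one project, assuming it is a Compose project with the same name."""
    return [
        # Stops and removes the project's containers and networks, plus its named volumes.
        ["docker", "compose", "-p", project, "down", "--volumes", "--remove-orphans"],
    ]
```

Running these commands on a schedule, for example via `subprocess.run(cmd, check=True)`, would prevent the disk from filling up the way it did during testing, without resorting to a blanket system prune.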

And there's a whole list of things that are higher priority than that.

Eventually I think Fastible will be able to build Fastible, but not yet.

Nice. Exciting times when we get there.

On that note, with Docker: do you know of any tools, systems, or workflows for the DevOps and SRE-level stuff?

Most of my interaction with LLMs is either on the coding side or general Q&A, but I've yet to find a system that assists me with deployment, other than copying the logs, getting advice, and then running commands manually.

Do you know anything that helps with that aspect?

Yeah.

The way I've been doing that, and I think it works extremely well, is by having the model build its own deployment tooling.

That's how I deploy Fastible right now.

It's just a deploy.py file.

Actually, I built something similar for the Microsoft Set-of-Mark repo as well as the Microsoft OmniParser repo.

It's just a deploy.py with a bunch of functions.

It's a command-line utility that you can call to first provision a server, then deploy the software to it, and then monitor logs, restart it, or whatever else you would normally do.

You have the model build its own utilities for doing that, and then it can easily interact with the server, or create a new one if it needs to, because it has this tool. I've found that to be very, very powerful.
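To make the idea concrete, here is a minimal sketch of what such a model-built deploy.py could look like. This is not the actual file from Fastible, Set-of-Mark, or OmniParser; the host, the app directory, the subcommand names, and the assumption that the app runs under Docker Compose on a Linux server reachable over SSH are all hypothetical.

```python
# deploy.py: a hypothetical sketch of a model-built deployment CLI, not the real file.
import argparse
import subprocess

# Hypothetical defaults; a real script would read these from config or env vars.
DEFAULT_HOST = "deploy@example.com"
APP_DIR = "app"


def run(cmd: list[str], dry_run: bool) -> list[str]:
    """Echo a command and, unless dry_run is set, execute it (raising on failure)."""
    print(" ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


def provision(host: str, dry_run: bool) -> list[str]:
    # Assumes a fresh Linux server with curl; uses Docker's convenience install script.
    return run(["ssh", host, "curl -fsSL https://get.docker.com | sudo sh"], dry_run)


def deploy(host: str, dry_run: bool) -> list[str]:
    # Assumes the app is already cloned into APP_DIR and ships a compose file.
    return run(["ssh", host, f"cd {APP_DIR} && git pull && docker compose up -d --build"], dry_run)


def logs(host: str, dry_run: bool) -> list[str]:
    return run(["ssh", host, f"cd {APP_DIR} && docker compose logs --tail 100"], dry_run)


def restart(host: str, dry_run: bool) -> list[str]:
    return run(["ssh", host, f"cd {APP_DIR} && docker compose restart"], dry_run)


COMMANDS = {"provision": provision, "deploy": deploy, "logs": logs, "restart": restart}


def main(argv=None):
    parser = argparse.ArgumentParser(description="Provision, deploy, and monitor a server (sketch).")
    parser.add_argument("command", nargs="?", choices=sorted(COMMANDS))
    parser.add_argument("--host", default=DEFAULT_HOST)
    parser.add_argument("--dry-run", action="store_true", help="print commands without running them")
    args = parser.parse_args(argv)
    if args.command is None:
        # No subcommand given: show usage instead of failing.
        parser.print_help()
        return None
    return COMMANDS[args.command](args.host, args.dry_run)


if __name__ == "__main__":
    main()
```

Because every action is a plain subcommand (for example `python deploy.py logs --host deploy@my-server`), a coding agent can call it like any other shell tool, and `--dry-run` lets it check what it is about to do first.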

That's super cool, and it goes along with a bigger encouragement for people to explore Docker, because then you have the Dockerfile and can make sure you have the exact same environment set up every single time.

I actually really like that.

I hadn't considered that.

Are there any other programmatic ways of enhancing the developer experience so you can leverage LLMs in non-coding ways?

Well, the one thing that comes to mind for me is, yeah, basically non-coding ways.

As technical people, we're always very, very focused on the tech, right?

But when you want to build a business, the technology is generally not the limiting factor.

It's users and distribution.

And understanding customer problems is a skill set that's very orthogonal to the technical stuff.

But luckily, large language models have a very broad skill set, so they're actually very helpful at that as well.

When I was building Fastible, I used, I think, ChatGPT Deep Research as well as Gemini Deep Research to do a comprehensive review of the similar tools available: not only Lovable, but also Base44 and the other ones.

And to really narrow in on what I could do that would be different.

And that was really, really helpful because otherwise I would have had to do all this research manually, right?

This way, it was done for me very, very quickly.

So basically I think it's useful for anything.

My next step is going to be the marketing, the distribution.

That's something I've never really cracked.

I'm a complete novice at that.

So I'm excited to start my ChatGPT education on distribution.

Excellent.

Yeah.

Like you said, it's a tough egg to crack, because you can't rely on the LLM to do much of the marketing copy generation: it's clearly AI slop and it doesn't sound that good.

But there are ways you can leverage it, like automating email blasts, for example.

There are ways it can assist, but having the strategic discussion with a frontier model will probably help a lot.

One thing I want to pull back to with Fastible: you mentioned that Lovable sometimes hallucinates packages or libraries, kind of makes things up, or uses outdated ones.

One thing I've noticed is that LLMs always tend to default to Beautiful Soup, and there are so many better web scrapers.

How do you, on the back end, manage the prompts or mitigate hallucinations, so that the generated code uses real libraries, and the correct ones?

Is there any validation there?

Yeah.

So it's similar to what we were talking about before with doing the research.

When I'm building a feature, I'll prompt the model to first research the available libraries and tools that can assist in building it.

It'll give me a list, and I could look at it myself, but it's tempting to just say, OK, pick the best one, look up the documentation, and produce a report on how to use this library for what we want to do.

This way, again, it's grounded in the actual library's documentation.

You can't rely on the model's parameters to give you useful results here.

You have to have it grounded in the actual library.

And luckily, models have access to tools, so they can, for example, do a curl request on the documentation.

That way, it's grounded in what the library actually does, as opposed to what the model thinks it does.
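The grounding step described above can be sketched in a few lines: fetch the documentation (the programmatic equivalent of the curl request) and place it directly in the prompt, with an instruction to rely only on it. This is a minimal sketch, not Fastible's implementation; `fetch_docs`, `build_grounded_prompt`, the prompt wording, and the 20,000-character truncation are hypothetical choices, and the actual model call is left to whatever LLM client you use.

```python
import urllib.request


def fetch_docs(url: str, max_chars: int = 20_000) -> str:
    """Download a documentation page (the `curl` step) and truncate it to fit the context."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")[:max_chars]


def build_grounded_prompt(feature: str, library: str, docs: str) -> str:
    """Put the real documentation in the prompt so the report is based on it, not on memory."""
    return (
        f"We are building this feature: {feature}\n"
        f"We chose the library '{library}'. Using ONLY the documentation below, "
        "write a short report on how to use it for this feature. "
        "If an API you need is not in the documentation, say so instead of guessing.\n\n"
        f"<documentation>\n{docs}\n</documentation>"
    )


# Hypothetical usage (needs network access and an LLM client of your choice):
# docs = fetch_docs("https://example.com/library/docs")
# report = llm(build_grounded_prompt("scrape product prices", "some-scraper", docs))
```

The explicit "say so instead of guessing" instruction is one simple way to discourage the model from filling gaps with remembered, possibly outdated APIs.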

Nice.

And do you have some type of storage or caching in case multiple users want to leverage the same feature, so you don't have to curl the same docs every single time?

Yeah, that's a really good point.

So that's something I've been thinking about.

That's a tricky one, though, because as soon as you start doing that, I can imagine users getting kind of miffed. There was all sorts of controversy around GitHub Copilot reproducing users' code, right?

This is a similar thing: one user builds some feature, and then I cache that feature so that other users can use it.

That first user may not be too happy about that.

I think the way to do this probably involves a manually curated set of components.

That's what I was thinking about doing, because right now it just uses the email login that comes with the FastAPI template.

But I know a lot of people want Google login, for example, and I've built Google login on this template before.

It's easy to do, but like, why do it over and over?

If the user just wants Google login, they can say, "I want Google login," and it should be able to pull it in without building it again from scratch, right?

But then you have the problem of invalidation.

At what point does that API become invalid, so that you have to update it?

So it's actually not such a straightforward problem, I think.
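One simple, partial answer to both the re-fetching question and the invalidation question is a time-based cache for fetched documentation. The sketch below is an assumption for illustration, not something Richard describes building: `DocCache`, the 24-hour default TTL, and the manual `invalidate` hook are hypothetical, and it only addresses caching public docs, not the harder question of sharing one user's generated components with others.

```python
import time
from typing import Callable


class DocCache:
    """Reuse fetched documentation, refetching an entry once it is older than ttl_seconds."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 24 * 3600,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch          # e.g. a function that downloads the docs page
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested
        self._entries: dict[str, tuple[float, str]] = {}

    def get(self, url: str) -> str:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]          # still fresh: no network call
        text = self._fetch(url)      # missing or stale: fetch again
        self._entries[url] = (now, text)
        return text

    def invalidate(self, url: str) -> None:
        """Drop an entry early, e.g. when a new library release is detected."""
        self._entries.pop(url, None)
```

A TTL trades freshness for fewer fetches; pairing it with explicit invalidation lets you react to a known breaking change without waiting for expiry.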

No, that's a very good point.

On the note of having the LLM curl docs and generate the report, a lot of people would describe that as a multi-agent setup.

Would you define it that way, or is it just a series of LLM API calls that you control the context for?

How would you define the architecture in terms of how AI is implemented in Fastible?

Yeah.

So that's a good question.

When it comes to agents, I always default to Anthropic's definition.

I really like this idea that an agent is basically a piece of software that decides what it's going to do next.

It's the model itself that's deciding what to do next.

So you basically have this while-true loop, and you just ask the model, "What do you want to do next?"

And that's an agent.

You're basically giving it agency: it can control what it's doing next.

But the problem with that is that the risk compounds. Language models hallucinate; they have all sorts of limitations.

So if you just let it go off on its own, it's going to go off the rails very quickly.

So if you can avoid using agents, you should. It depends on what your goal is.

If your goal is just to tinker with agents, then sure, tinker with agents. But if your goal is to make working software... or rather, let me back up.

Your goal should never be to make software.

Your goal should be to make something useful.

Then, what does it take to do something useful? Well, you have to build some software, and you want to build as little software as possible for it to be useful.

You don't want to go off and build software for the sake of software, which I think agents are really good at.

You tell them to build software, and they're going to build a whole lot of it.

It's not necessarily going to be helpful though.

I think if you can get away with just an API call, you should, and Anthropic defines these as workflows.

And I think that's a good term.

As an engineer, you know what the proper procedure is for a certain type of user experience.

So you can encode that into the software.

But I guess this comes back a little bit to the Bitter Lesson.

I believe it was Rich Sutton who coined the term "the Bitter Lesson."

The idea is that AI researchers want to encode their knowledge into AI to make the AI better.

And while that does improve the AI a little bit in the short term, in the long term it gets beaten out by just throwing more data, more parameters, and more compute at it.

That's the tricky situation we find ourselves in: if you use agents, your software is going to be more flexible in the long run.

So it's a very fine line to draw, I think.

I'm not 100% convinced either way; I don't think there's any hard-and-fast rule.

I think the right approach comes down to a case-by-case basis.

Right now, everything in Fastible is very much a workflow except the agent chat itself.

We're using large language model calls behind the scenes.

For example, creating commit messages: that's not an agent doing that, because I know that I need to create a commit message.

So I'm just going to prompt a model to create a commit message.
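A commit-message step like the one described is a workflow in Anthropic's sense: the code fixes the order of operations, and the model fills in exactly one fuzzy piece. The sketch below is illustrative only; the function names, the prompt, the 72-character limit, and the fallback message are assumptions, and `llm` stands for any function that sends a prompt to a model and returns text.

```python
import subprocess
from typing import Callable


def staged_diff(repo_dir: str) -> str:
    """Deterministic step: read the staged changes with git."""
    result = subprocess.run(["git", "diff", "--cached"], cwd=repo_dir,
                            capture_output=True, text=True, check=True)
    return result.stdout


def commit_message(diff: str, llm: Callable[[str], str]) -> str:
    """Workflow step: one fixed prompt, one model call; the code, not the model, decides the flow."""
    prompt = ("Write a one-line git commit message in the imperative mood, "
              "under 72 characters, for this diff:\n\n" + diff)
    lines = llm(prompt).strip().splitlines()
    # Deterministic post-processing: keep the first line and enforce the length limit.
    return (lines[0].strip() if lines else "Update files")[:72]


def commit_staged_changes(repo_dir: str, llm: Callable[[str], str]) -> None:
    """The whole workflow: diff, then message, then commit, always in this order."""
    message = commit_message(staged_diff(repo_dir), llm)
    subprocess.run(["git", "commit", "-m", message], cwd=repo_dir, check=True)
```

Because nothing here lets the model choose its next action, the cost and behavior of this step stay predictable.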

The only thing that's an agent is the user chat, where the user describes the feature they want to build.

That's the agent behind the scenes that goes in and builds the software, and we give it access to some tool calls.

It can read the existing files, write files, and restart the environment.

And then we give it some budget: you can go for 10 steps, so do whatever you're going to do in those 10 steps.

You're going to read some files, you're going to write some files.

I don't know, just go ahead.

But I think it's important to cap it, because otherwise it can go on forever.

And then you're going to have like this massive bill and you're going to have a whole bunch of useless software.

In Fastible, we give the user an option.

You can say it can go for 10 steps, 30 steps, 100 steps, or some custom number of steps.
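The agent described here differs from the workflow mainly in who picks the next step. A minimal sketch of that loop with a step budget follows; it is not Fastible's code. `Action`, `run_agent`, the tool names, and the `"done"` convention are hypothetical, and `decide` stands in for a model call that looks at the history so far and returns the next action.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    """One step chosen by the model, e.g. Action("write_file", {"path": ..., "text": ...})."""
    tool: str
    args: dict = field(default_factory=dict)


def run_agent(decide: Callable[[list], Action],
              tools: dict[str, Callable[..., str]],
              max_steps: int = 10) -> list:
    """Agent loop: the model picks each next action, but never more than max_steps."""
    history: list = []
    for _ in range(max_steps):           # a budget instead of an unbounded `while True`
        action = decide(history)         # the model decides what to do next
        if action.tool == "done":
            break
        tool = tools.get(action.tool)
        result = tool(**action.args) if tool else f"error: unknown tool {action.tool!r}"
        history.append((action, result))  # fed back to the model on the next step
    return history
```

The budget is the only thing standing between a confused model and an unbounded bill, which is why it is a plain loop bound rather than something the model can negotiate.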

Very cool.

Since you brought up the Bitter Lesson, one thing I'm internally conflicted about is creating tools for LLMs, because you get way more success.

The likelihood of a tool call resulting in a successful output is much higher than having the LLM just try to generate the output by itself.

But by creating tools, we as the developers are kind of guiding it, directing it, imparting our knowledge.

What for you is the threshold to develop a tool versus letting the LLM figure out the problem on its own?

Yeah, I think it's a similar rule to the one used for refactoring, I would say.

So if you're doing a thing once, you should just do that thing once and then don't think about it again.

If you're doing it twice, make a note of it: "Huh, I did this twice. Maybe I should think about not doing this again."

And when you come around to doing it the third time, as I mentioned with refactoring, you should probably refactor it into a reusable component. Maybe not all the time, but I think a similar thing applies to tools.

If you find it's something you want your model to be doing on some regular cadence, then you probably want to package it up into a tool.

Similarly, if it's a very complex thing that you can break down into a series of steps: you don't want to use models for everything, because they're expensive and they're not deterministic.

You want to use them just as little as possible, actually, just where they're needed.

So you want to create a tool that's deterministic and give it to the model, so it can call it when it wants to.
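Here is a minimal sketch of "a deterministic tool the model can call when it wants to." The example tool, which reports whether modules are importable (handy for catching hallucinated packages), the `TOOLS` registry, and the `{"name": ..., "arguments": {...}}` call shape are all assumptions loosely modeled on common function-calling formats, not any specific provider's API or Fastible's implementation.

```python
import importlib.util
import json
from typing import Callable


def check_packages(names: list[str]) -> dict[str, bool]:
    """Deterministic tool: which top-level module names are importable in this environment."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


# Tools exposed to the model: the function plus a description for the prompt.
TOOLS: dict[str, tuple[Callable, str]] = {
    "check_packages": (check_packages,
                       "check_packages(names: list[str]) -> {name: installed?}"),
}


def dispatch(tool_call_json: str) -> str:
    """Run a model-issued call shaped like {"name": ..., "arguments": {...}}; return JSON."""
    call = json.loads(tool_call_json)
    if call.get("name") not in TOOLS:
        return json.dumps({"error": f"unknown tool {call.get('name')!r}"})
    func, _description = TOOLS[call["name"]]
    return json.dumps(func(**call.get("arguments", {})))
```

The model only decides *whether* and *with what arguments* to call the tool; the answer itself comes from ordinary code, so it is the same every time.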

Yeah, no, I fully agree: as little LLM as possible in the workflows. Use it for turning fuzzy input into something structured, or for letting the output be customized, but through the middle, as much code as possible, so you know exactly what's going to happen.

Other advice I'd love to hear from you: you were talking about context engineering on a past episode, but what about specifically for building full-stack apps?

It feels like there's so much variability in what people could build, but you also put guardrails on it.

Do you have any advice for people who are prompting to make their own full-stack app?

What type of context engineering should they take into account?

You mentioned the documentation, but is there anything else that's relevant?

Well, I mentioned finding the target market. When I was building Fastible, I had two documents.

One was the architecture document, which describes the functionality and like how the different pieces should fit together.

The other was what I call the economics document: who is using this, and why do they care?

I think that was really helpful because it came down to prioritization.

There was all sorts of stuff I wanted to build, and still want to build, but that's me doing my engineering thing, and users don't necessarily care about those things.

So I found it very helpful to be ruthlessly pragmatic about cutting scope.

One document would describe all this functionality, and the other would describe the users and what they care about.

Then I could use both of them together to figure out what I should build next.

So I used that to prioritize the development.

As you build your own apps to solve your own workflows, you're going to want to power them with real data using MCP, and that can be a little bit scary, but that's why I've been testing out ToolHive.

ToolHive makes it simple and secure to use MCP.

It includes a registry of trusted MCP servers and lets me containerize any server with one command. I can install it in a client in seconds, and secret protection and network isolation are built in.

You can try ToolHive too. It's free and open source; learn more at toolhive.dev.

Now back to the conversation with Richard.

Since Fastible develops assets that people ideally deploy to the web and actually use, how much of the security concern do you have to manage, versus how much is the user's responsibility?

Are there things built in to help prevent security risks, or is it one of those things where people should talk to ChatGPT or Claude to get a better understanding, so they can put these guardrails on before deploying something that could cause actual harm?

Yeah, that's a really good question.

There are a couple of places where we're handling that.

One is in the usage of secrets. Fastible itself has a bunch of secrets, for example, the API key that you put in there.

To mitigate any security risk there, we're actually not storing them ourselves; we store them in GitHub.

What's actually happening on the back end is that we create a private repo for every project that's created.

And then we're just using the GitHub repository to store all the secrets.

That way, and there's a big dependence on GitHub, we're relying on Microsoft and their armies of engineers to make sure your secrets are safe.

When it comes to the agent itself and what it's building, this is still a work in progress, but we're using a similar approach to the one the Full Stack FastAPI Template takes, which is just a .env file.

In the .gitignore, we're explicitly telling Git to ignore this particular file, the .env file.

And the .env file contains all the secrets.

This is part of the system prompt I mentioned earlier, so that the model knows: if I need to create secrets, they should be stored here.

And at runtime, the environment variables get populated from the .env file.
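The pattern is: secrets live in a `.env` file, `.gitignore` contains a `.env` line so Git never tracks it, and the application copies the file's values into environment variables when it starts. The sketch below illustrates the loading step with a deliberately minimal parser; it is an assumption for illustration, not the FastAPI template's or Fastible's code. `parse_dotenv` and `load_env_file` are hypothetical names, and real projects commonly use the python-dotenv package's `load_dotenv()` instead, which handles more syntax (such as variable expansion).

```python
import os
from pathlib import Path


def parse_dotenv(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines; skip blanks and comments; strip matching quotes and `export `."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        key = key.strip()
        if key.startswith("export "):
            key = key[len("export "):].strip()
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key] = value
    return values


def load_env_file(path: str = ".env", override: bool = False) -> dict[str, str]:
    """Copy values from the .env file into os.environ at startup (existing vars win by default)."""
    env_file = Path(path)
    values = parse_dotenv(env_file.read_text(encoding="utf-8")) if env_file.exists() else {}
    for key, value in values.items():
        if override or key not in os.environ:
            os.environ[key] = value
    return values
```

Code then reads secrets with `os.environ["SOME_API_KEY"]`, so the secret values themselves never appear in committed source files.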

So you're never committing any secrets to Git.

Now, that's obviously not hard and fast. It's still possible that if you paste a secret into the chat and explicitly give it instructions like, "Hey, put this on the front page," well, it's going to do that.

It's hard to mitigate against that.

I have some ideas around that, for example, filtering everything that goes through some layer of the application.

You can just do some search-and-replace for secret names.

So anything that ends with API key, or something like that, as some sort of fallback.
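The fallback idea, filtering output for anything that looks like a secret, might look like the sketch below. It is an illustration under stated assumptions, not a feature Fastible ships: the name pattern (`API_KEY`, `SECRET`, `TOKEN`, `PASSWORD` suffixes), the 8-character minimum, and the `[REDACTED]` marker are arbitrary choices. As the conversation notes, it is not foolproof; it cannot catch a secret that was never stored in an environment variable, or one that has been encoded or split up.

```python
import os
import re
from collections.abc import Mapping
from typing import Optional

# Heuristic: variable names that look like secrets (an assumption; extend as needed).
SECRET_NAME = re.compile(r"(API_KEY|SECRET|TOKEN|PASSWORD)$", re.IGNORECASE)


def secret_values(env: Mapping[str, str], min_len: int = 8) -> list[str]:
    """Values of secret-looking variables; very short values are skipped to avoid false hits."""
    return [v for k, v in env.items() if SECRET_NAME.search(k) and len(v) >= min_len]


def redact_secrets(text: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Fallback filter: replace any known secret value that appears in outgoing text."""
    env = os.environ if env is None else env
    # Longest first, so a secret that contains another is masked in full.
    for value in sorted(secret_values(env), key=len, reverse=True):
        text = text.replace(value, "[REDACTED]")
    return text
```

Matching on known *values* rather than on names in the text means the filter still works when the model writes the raw key without its variable name.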

But even that's not foolproof, right?

At the end of the day, if somebody really wants to do something insecure, we're going to let them; we're not going to prevent them.

There's no way to, actually.

Yeah, and similarly, most leaks and most vulnerabilities are the human's fault; we're the weakest link in the whole security apparatus.

The fact that these GitHub repos are private by default keeps things contained, at least, so someone can audit them.

If you have one of those GitHub Actions bots running to scan your code, that's another layer of security people can add.

There's always risk when you start putting stuff up there, and the best thing we can do is try to educate people and put some degree of guardrails on.

But if they just vibe code their own thing anyway, it's going up on the Internet.

So at least this way you have a bit of control over what we can prevent.

Just a few general thoughts.

Back in the day, I worked for a no-code app company, and now, with all of these tools like Fastible and other prompt-to-app tools, do you see no-code as reaching its end, or do you still see a place for it?

Yeah, it's interesting. I was talking to a friend of mine who is a big proponent of Bubble.

Bubble is a no-code application builder, and a lot of people have built entire dev shops and careers on Bubble.

So I think they probably have their place.

I was asking him why he likes it so much, and he said he really likes being able to just point and click.

Like, "I want a widget here": you just click, and you get a widget there, as opposed to having to describe to the model, "Oh, put it on the left of this other thing."

But this is another feature I want to build into Fastible.

Basically, you can build the same thing: you click wherever you want a thing and then prompt, "Oh, I want a thing here."

And so that shouldn't be too hard to build.

It's not implemented yet.

So I think that eventually these things are going to converge. I think Bubble already has some AI tools they're building, and it's just the first level, right?

They're going to continue expanding on it.

And these AI builders, like Fastible, are going to build in no-code features like point-and-click.

So I think they're going to probably converge.

Yeah, the ability to have a coordinate system, or just a way to capture where the user clicks and feed that through: I think that'll work once it's implemented.

So one thing that tools like Fastible enable is this idea of disposable software, where you get an idea, you spin up something really fast, you try it out, and if it doesn't work, whatever, you can try again or throw it away.

You lost a couple of dollars and a little bit of time.

Ultimately, it's the idea that software doesn't have to have the same longevity it used to, because there's a lot less sunk cost.

What are your thoughts on that as a general trend?

Do you think it's a good thing or a bad thing? And where are we going with disposable software?

Yeah.

Andrew Ng gave a talk on this recently at Y Combinator's Startup School.

He said people are claiming that now is the time to stop training people to write software, because the value of software has plummeted.

And he said it's true that the value of code has plummeted, because now you can generate code instantaneously for fractions of a cent, but that doesn't mean the value of software has plummeted.

He actually thinks it's the opposite.

He thinks that as software becomes more and more accessible to people, the value of software is going to increase, because people are going to make more software to solve even more specialized problems.

So I mean, it's impossible to tell the future.

And obviously I'm biased, because I like building software and I don't want it to go away.

But, sorry, Andrew Ng is biased too, because he co-founded Coursera.

Coursera is all about learning how to code, right?

So yeah, it's really hard to predict the future, but at least for now, until AGI is here, which I don't think it necessarily is yet, I don't think the value of software is going away.

I think we're safe for a little while.

I agree, and I think there are a couple of other principles that tie into this too.

I'm a big proponent of Obsidian's whole "file over app" principle, because if you control your data, keep your information on your computer, and just develop different types of software to interface with that data, then the app doesn't matter.

I use Obsidian for my note-taking, and I know someone who didn't like the way tasks could be prioritized and wanted something a little more automated.

Instead of making a plugin, they spun up an entire app that read the same vault, took in the Markdown files, looked for the dash and square brackets that designate a task, and brought them all in.

Then it gave them a nice draggable interface where you could add tags to prioritize: just a new wrapper around the data.
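The "new wrapper around the same data" idea works because Obsidian tasks are plain Markdown lines such as `- [ ] Ship login #urgent`. A minimal read-only sketch of the parsing such an app might do is below; it is hypothetical, not the app described. `parse_tasks`, `collect_vault_tasks`, the `#tag` convention, and the returned dict shape are assumptions, and the code never writes back to the vault.

```python
import re
from pathlib import Path

# Markdown task lines: "- [ ] text" (open) or "- [x] text" (done); "*" bullets also accepted.
TASK_LINE = re.compile(r"^\s*[-*]\s+\[( |x|X)\]\s+(.*)$")
TAG = re.compile(r"#([\w/-]+)")


def parse_tasks(markdown: str, source: str = "") -> list[dict]:
    """Extract tasks, their done state, and inline #tags from one note."""
    tasks = []
    for line in markdown.splitlines():
        m = TASK_LINE.match(line)
        if m:
            text = m.group(2).strip()
            tasks.append({"text": text, "done": m.group(1).lower() == "x",
                          "tags": TAG.findall(text), "source": source})
    return tasks


def collect_vault_tasks(vault_dir: str) -> list[dict]:
    """Read every .md file in the vault (read-only) and gather all tasks."""
    tasks = []
    for md in sorted(Path(vault_dir).rglob("*.md")):
        tasks.extend(parse_tasks(md.read_text(encoding="utf-8"), source=str(md)))
    return tasks
```

Since the source of truth stays in the Markdown files, any number of throwaway front ends (a draggable board, a CLI, a dashboard) can sit on top of it.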

So I think people need to get into the habit of finding ways to make their data portable, because the way you interface with it is soon going to be whatever you want it to be.

Interesting.

Yeah, I could see that being in conflict with a bunch of big companies whose entire business model is built around holding your data and giving you access to it.

So there might be some friction there.

Yeah. Do you think SaaS is going to be affected by products like Fastible?

I've heard that it already is being affected. Well, I've heard a couple of different claims about this.

One is that it's already being affected because it's becoming so simple to spin up new SaaS, new software products.

People are claiming there will be an inundation, that we'll be oversaturated with different products.

But then there was a report released recently that suggested the opposite.

Somebody had done an empirical analysis (I can't remember which data sources) suggesting that, despite the fact that the effort required to create software has decreased so much, the actual amount of software being produced isn't changing that much.

It's still on the same trend as it was before LLMs came on the scene.

I think it's still too early to tell.

Yeah. People can't predict the future, and now it's harder than ever.

Richard, this was a blast.

I'm pumped about Fastible.

I hope more people try it out. Before you go, is there anything else you want the audience to know?

Just check it out.

It's free to use other than the API key.

So go to fastible.dev. You need a GitHub account for now, but I'm going to be adding Google login so you won't need that. Just give it a shot.

Thank you for listening to this conversation with Richard Abrich.

I'm so glad he came back.

I always have a blast talking to him, and he always provides some insight that I hadn't really considered.

If you're looking to build your own app, I suggest you check out Fastible.

It's free with your API key so you're only paying for the usage.

And this way you can start getting into the habit of building disposable software: software that's made for your specific use case at a specific point in time.

Maybe you'll change it in the future, maybe you'll just throw it away, but the act of building, exploring, and seeing what's possible is very rewarding in itself, let alone the productivity gains from getting a tool that's hyper-customized to your use case rather than generic off-the-shelf stuff.

So I want to give a quick shout-out to ToolHive for supporting the show so I'm able to have conversations like this. And please let me know what else you'd like to see and what has inspired you.

What are you building these days?

And if you could like and subscribe, it would really mean a lot.

So thank you and I'll see you next week.